Computing

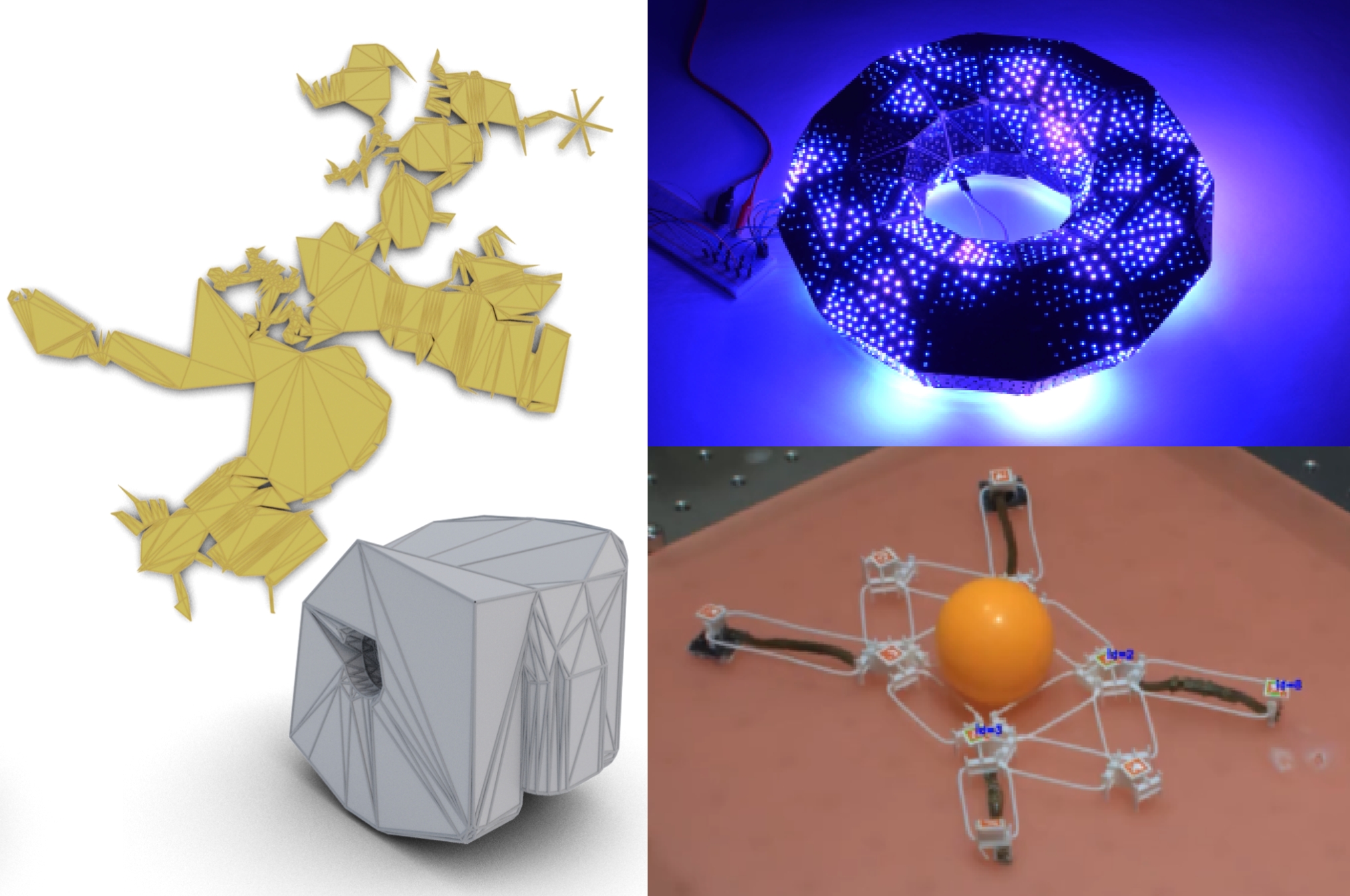

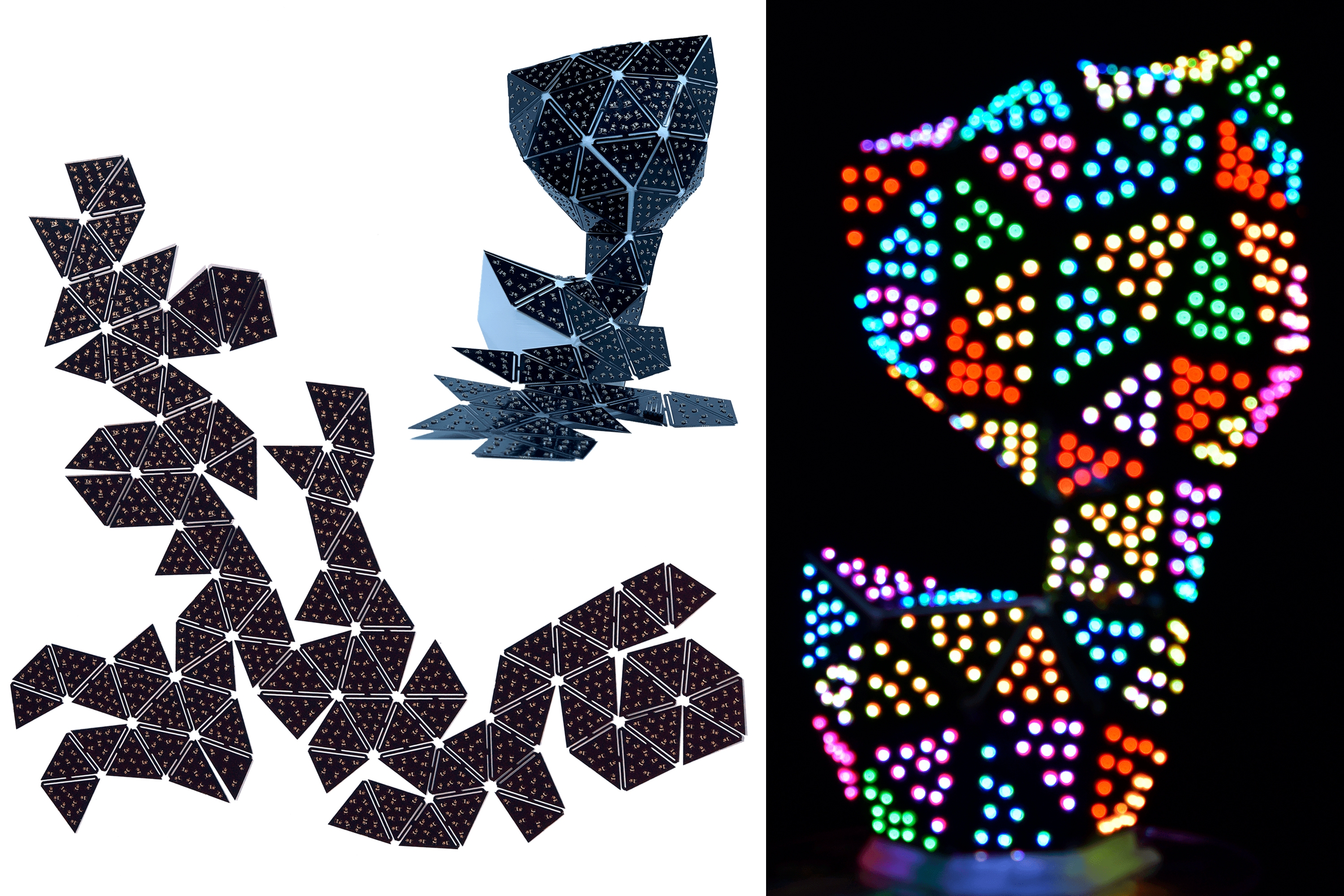

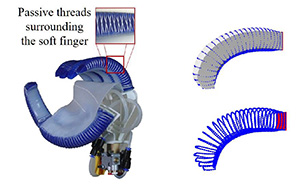

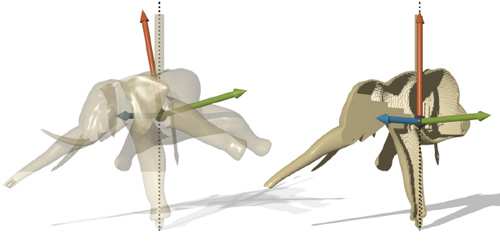

Complex 3D shapes can be created by morphing flat 2D configurations. Such deformations either preserve the intrinsic material geometry (e.g., folding paper) or modify it through localized contraction. Once transformed, the 3D shape can be further controlled to achieve a target functionality. A key challenge is to take the material specifications and the actuation process as input to automatically design the target 3D shape and its functionality. This thesis presents two novel computational pipelines for the design and control of shape-morphing structures used to create functional prototypes. The first pipeline borrows from the art of origami to fold paper into intricate shapes and applies this principle to make 3D lighting displays. We introduce PCBend, a computational design approach that covers a surface with individually addressable RGB LEDs, effectively forming a low-resolution surface by folding rigid printed circuit boards (PCBs). We optimize cut patterns on PCBs to act as hinges and co-design LED placement, circuit routing, and fabrication constraints to produce PCB blueprints. The PCBs are fabricated using automated standard manufacturing services with LEDs embedded on them. Finally, the fabricated PCBs are cut along the contour and folded onto a 3D-printed support. The 3D lighting display is then controlled to display complex surface light patterns. Creating 3D shapes through folding is only possible if their planar configuration, called an "unfolding", exists without any distortion or overlap. Existing methods often permit distortion or require multiple patches, which are unsuitable for fabrication pipelines that rely on folding non-stretchable materials. We reinforce such fabrication pipelines by providing a geometric relaxation to the problem, where the input shape is modified to admit overlap-free unfolding. The second fabrication pipeline extends shape morphing to soft robotics by emulating nature’s blueprint of distributed actuation.
Inspired by vertebrates, we build musculoskeletal robots using modular active actuators, employing Liquid Crystal Elastomers (LCEs) as shrinkable artificial muscles integrated with 3D-printed bones. The chemical composition of LCEs is altered to enable untethered actuation through infrared radiation, allowing active control of individual muscles and their corresponding bones. The combined motion of individual bones defines the robot’s overall shape and functionality. Our proposed system significantly expands both the design and control spaces of soft robots, which we harness using our computational design tools. We build several physical robots that exhibit complex shape morphing and varied terrain navigation, showcasing the versatility of our pipeline. This thesis explores applications ranging from intricate light patterns displayed on 3D shapes formed by folding rigid PCBs to untethered robots that use contractile muscles to exhibit shape morphing and locomotion. Through these examples, the thesis highlights how computational design and distributed actuation, integrated with novel materials, can transform passive structures into functional prototypes.

@phdthesis{BhargavaThesis2025,

author = {Manas Bhargava},

title = {Design and control of deformable structures: From PCB lighting displays to elastomer robots},

school = {ISTA},

year = {2025},

month = {9}

}

Computer Graphics Forum (Eurographics 2025)

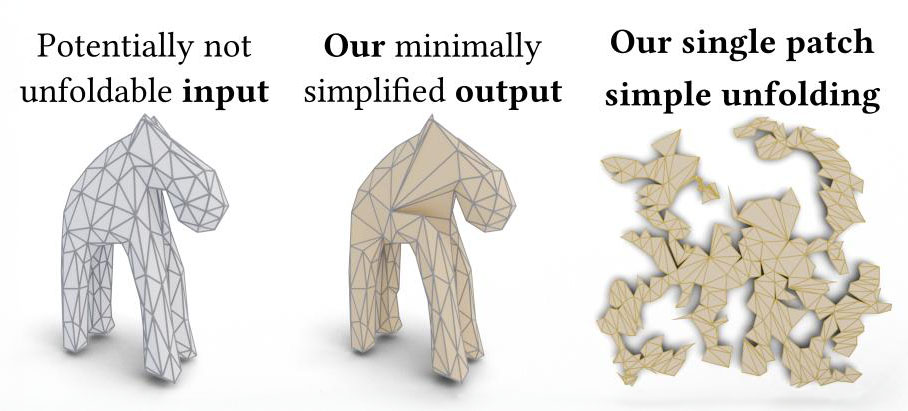

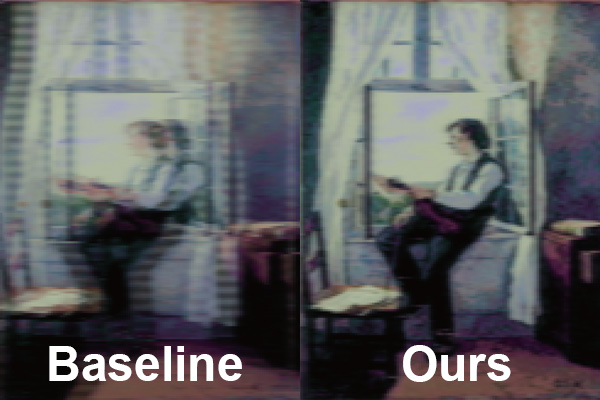

We present a computational approach for unfolding 3D shapes isometrically into the plane as a single patch without overlapping triangles. This is a hard, sometimes impossible, problem, which existing methods are forced to soften by allowing for map distortions or multiple patches. Instead, we propose a geometric relaxation of the problem: we modify the input shape until it admits an overlap-free unfolding. We achieve this by locally displacing vertices and collapsing edges, guided by the unfolding process. We validate our algorithm quantitatively and qualitatively on a large dataset of complex shapes and show its proficiency by fabricating real shapes from paper.

@article{https://doi.org/10.1111/cgf.15269,

author = {Bhargava, M. and Schreck, C. and Freire, M. and Hugron, P. A. and Lefebvre, S. and Sellán, S. and Bickel, B.},

title = {Mesh Simplification for Unfolding},

journal = {Computer Graphics Forum},

volume = {n/a},

number = {n/a},

pages = {e15269},

keywords = {fabrication, single patch unfolding, mesh simplification},

doi = {https://doi.org/10.1111/cgf.15269},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.15269},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.15269},

}

ACM Transactions on Graphics (Siggraph Asia 2023)

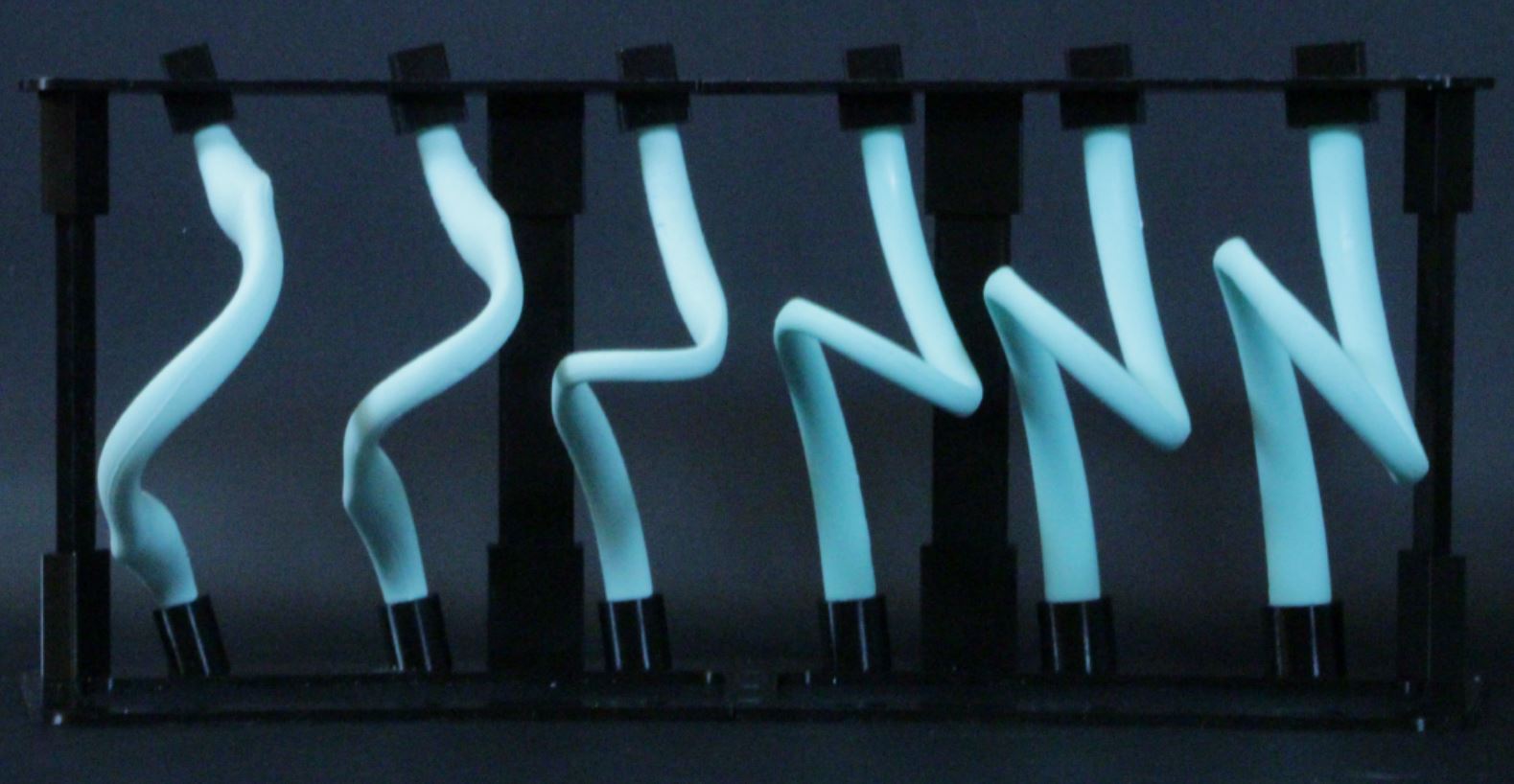

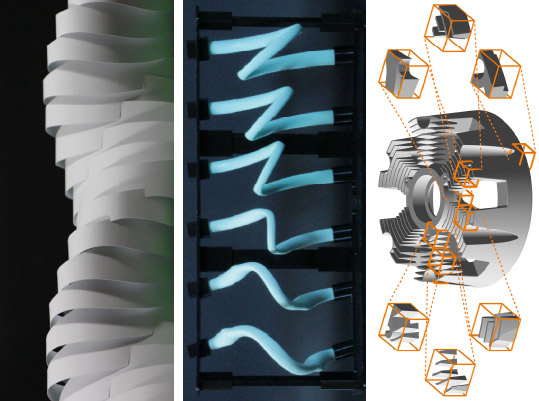

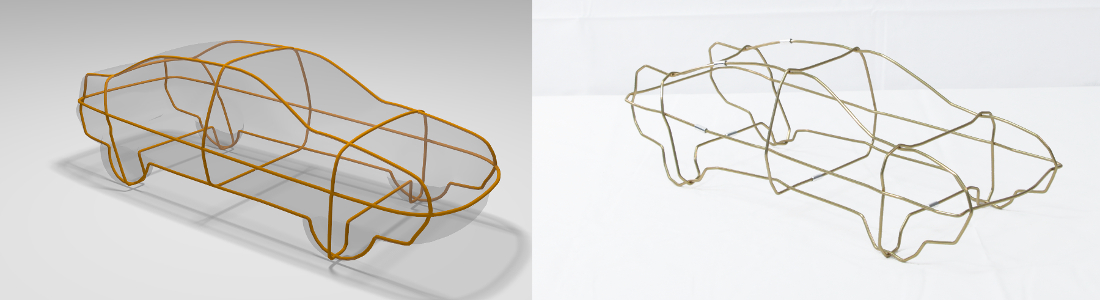

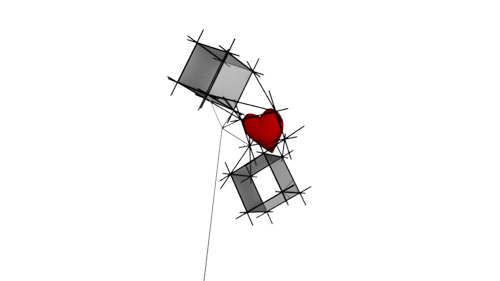

The Kirchhoff rod model describes the bending and twisting of slender elastic rods in three dimensions, and has been widely studied to enable the prediction of how a rod will deform, given its geometry and boundary conditions. In this work, we study a number of inverse problems with the goal of computing the geometry of a straight rod that will automatically deform to match a curved target shape after attaching its endpoints to a support structure. Our solution lets us finely control the static equilibrium state of a rod by varying the cross-sectional profiles along its length. We also show that the set of physically realizable equilibrium states admits a concise geometric description in terms of linear line complexes, which leads to very efficient computational design algorithms. Implemented in an interactive software tool, they allow us to convert three-dimensional hand-drawn spline curves to elastic rods, and give feedback about the feasibility and practicality of a design in real time. We demonstrate the efficacy of our method by designing and manufacturing several physical prototypes with applications to interior design and soft robotics.

@article{KirchhoffHafner23,

author = {Hafner, Christian and Bickel, Bernd},

title = {The Design Space of Kirchhoff Rods},

year = {2023},

issue_date = {October 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {42},

number = {5},

issn = {0730-0301},

url = {https://doi.org/10.1145/3606033},

doi = {10.1145/3606033},

journal = {ACM Trans. Graph.},

month = {sep},

articleno = {171},

numpages = {20},

keywords = {Kirchhoff Rods, Digital Fabrication, Active Bending, Computational Design}

}

ACM Transactions on Graphics (Siggraph 2023)

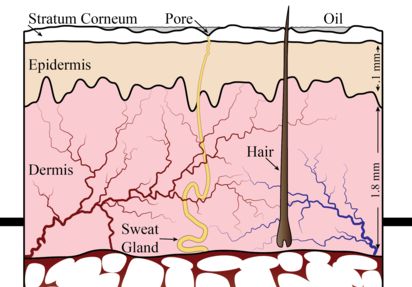

Tattoos are a highly popular medium, with both artistic and medical applications. Although the mechanical process of tattoo application has evolved historically, the results are reliant on the artisanal skill of the artist. This can be especially challenging for some skin tones, or in cases where artists lack experience. We provide the first systematic overview of tattooing as a computational fabrication technique. We built an automated tattooing rig and a recipe for the creation of silicone sheets mimicking realistic skin tones, which allowed us to create an accurate model predicting tattoo appearance. This enables several exciting applications including tattoo previewing, color retargeting, novel ink spectra optimization, color-accurate prosthetics, and more.

@article{Piovarci2023,

author = {Michal Piovar\v{c}i and Alexandre Chapiro and Bernd Bickel},

title = {Skin-Screen: A Computational Fabrication Framework for Color Tattoos},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH)},

year = {2023},

volume = {42},

number = {4}

}

ACM Transactions on Graphics (Siggraph 2023)

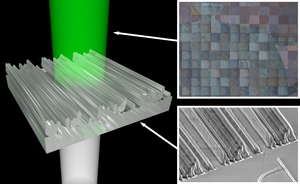

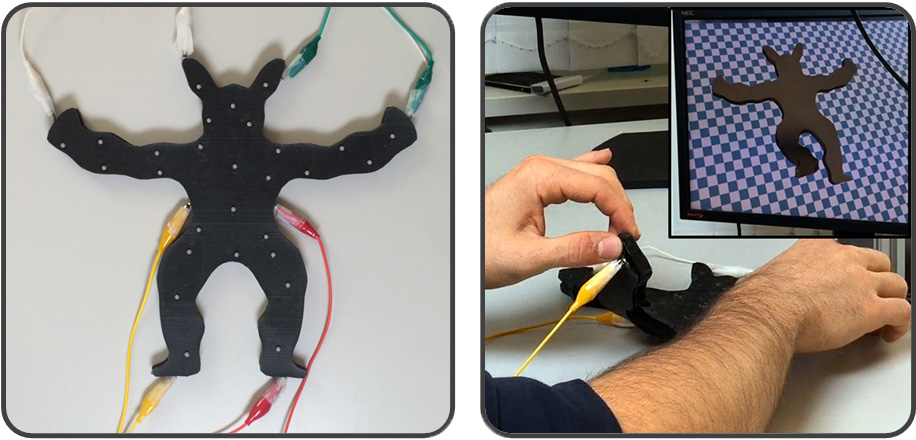

We propose a computational design approach for covering a surface with individually addressable RGB LEDs, effectively forming a low-resolution surface screen. To achieve a low-cost and scalable approach, we propose creating designs from flat PCB panels bent in-place along the surface of a 3D printed core. Working with standard rigid PCBs enables the use of established PCB manufacturing services, allowing the fabrication of designs with several hundred LEDs. Our approach optimizes the PCB geometry for folding, and then jointly optimizes the LED packing, circuit and routing, solving a challenging layout problem under strict manufacturing requirements. Unlike paper, PCBs cannot bend beyond a certain point without breaking. Therefore, we introduce parametric cut patterns acting as hinges, designed to allow bending while remaining compact. To tackle the joint optimization of placement, circuit and routing, we propose a specialized algorithm that splits the global problem into one sub-problem per triangle, which is then individually solved. Our technique generates PCB blueprints in a completely automated way. After being fabricated by a PCB manufacturing service, the boards are bent and glued by the user onto the 3D printed support. We demonstrate our technique on a range of physical models and virtual examples, creating intricate surface light patterns from hundreds of LEDs.

@article{freire:hal-04129354,

TITLE = {PCBend: Light Up Your 3D Shapes With Foldable Circuit Boards},

AUTHOR = {Freire, Marco and Bhargava, Manas and Schreck, Camille and Hugron, Pierre-Alexandre and Bickel, Bernd and Lefebvre, Sylvain},

URL = {https://inria.hal.science/hal-04129354},

JOURNAL = {ACM Transactions on Graphics},

PUBLISHER = {Association for Computing Machinery},

YEAR = {2023},

DOI = {10.1145/3592411},

KEYWORDS = {PCB design ; PCB bending ; automated placement and routing ; 3D electronics},

PDF = {https://inria.hal.science/hal-04129354/file/main.pdf},

HAL_ID = {hal-04129354},

HAL_VERSION = {v1},

}

ACM Siggraph 2023 Conference Papers

We present a technique to optimize the reflectivity of a surface while preserving its overall shape. The naïve optimization of the mesh vertices using the gradients of reflectivity simulations results in undesirable distortion. In contrast, our robust formulation optimizes the surface normal as an independent variable that bridges the reflectivity term with differential rendering, and the regularization term with as-rigid-as-possible elastic energy. We further adaptively subdivide the input mesh to improve the convergence. Consequently, our method can minimize the retroreflectivity of a wide range of input shapes, resulting in sharply creased shapes ubiquitous among stealth aircraft and Sci-Fi vehicles. Furthermore, by changing the reward for the direction of the outgoing light directions, our method can be applied to other reflectivity design tasks, such as the optimization of architectural walls to concentrate light in a specific region. We have tested the proposed method using light-transport simulations and real-world 3D-printed objects.

@inproceedings{Tojo2023Stealth,

title = {Stealth Shaper: Reflectivity Optimization as Surface Stylization},

author = {Tojo, Kenji and Shamir, Ariel and Bickel, Bernd and Umetani, Nobuyuki},

booktitle = {ACM SIGGRAPH 2023 Conference Proceedings},

year = {2023},

series = {SIGGRAPH '23},

}

ACM Siggraph 2023 Conference Papers

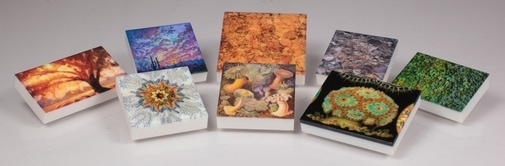

Color and gloss are fundamental aspects of surface appearance. State-of-the-art fabrication techniques can manipulate both properties of the printed 3D objects. However, in the context of appearance reproduction, perceptual aspects of color and gloss are usually handled separately, even though previous perceptual studies suggest their interaction. Our work is motivated by previous studies demonstrating a perceived color shift due to a change in the object’s gloss, i.e., two samples with the same color but different surface gloss appear as if they have different colors. In this paper, we conduct new experiments which support this observation and provide insights into the magnitude and direction of the perceived color change. We use the observations as guidance to design a new method that estimates and corrects the color shift enabling the fabrication of objects with the same perceived color but different surface gloss. We formulate the problem as an optimization procedure solved using differentiable rendering. We evaluate the effectiveness of our method in perceptual experiments with 3D objects fabricated using a multi-material 3D printer and demonstrate potential applications.

@inproceedings{Condor2023,

author = { Jorge Condor and Michal Piovar\v{c}i and Bernd Bickel and Piotr Didyk},

title = {Gloss-aware Color Correction for 3D Printing},

booktitle = {SIGGRAPH 2023 Conference Papers},

year = {2023},

}

3D printing based on continuous deposition of materials, such as filament-based 3D printing, has seen widespread adoption thanks to its versatility in working with a wide range of materials. An important shortcoming of this type of technology is its limited multi-material capabilities. While there are simple hardware designs that enable multi-material printing in principle, the required software is heavily underdeveloped. A typical hardware design fuses together individual materials fed into a single chamber from multiple inlets before they are deposited. This design, however, introduces a time delay between the intended material mixture and its actual deposition. In this work, inspired by diverse path planning research in robotics, we show that this mechanical challenge can be addressed via improved printer control. We propose to formulate the search for optimal multi-material printing policies in a reinforcement learning setup. We put forward a simple numerical deposition model that takes into account the non-linear material mixing and delayed material deposition. To validate our system we focus on color fabrication, a problem known for its strict requirements for varying material mixtures at a high spatial frequency. We demonstrate that our learned control policy outperforms state-of-the-art hand-crafted algorithms.

Computer Graphics Forum (Eurographics 2023)

Embroidery is a long-standing and high-quality approach to making logos and images on textiles. Nowadays, it can also be performed via automated machines that weave threads with high spatial accuracy. A characteristic feature of the appearance of the threads is a high degree of anisotropy. The anisotropic behavior is caused by depositing thin but long strings of thread. As a result, the stitched patterns convey both color and direction. Artists leverage this anisotropic behavior to enhance pure color images with textures, illusions of motion, or depth cues. However, designing colorful embroidery patterns with prescribed directionality is a challenging task, one usually requiring an expert designer. In this work, we propose an interactive algorithm that generates machine-fabricable embroidery patterns from multi-chromatic images equipped with user-specified directionality fields. We cast the problem of finding a stitching pattern into vector theory. To find a suitable stitching pattern, we extract sources and sinks from the divergence field of the vector field extracted from the input and use them to trace streamlines. We further optimize the streamlines to guarantee a smooth and connected stitching pattern. The generated patterns approximate the color distribution constrained by the directionality field. To allow for further artistic control, the trade-off between color match and directionality match can be interactively explored via an intuitive slider. We showcase our approach by fabricating several embroidery paths.
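The source and sink extraction mentioned above can be illustrated on a regular grid. The following is only a minimal sketch, assuming the directionality field is sampled as two NumPy arrays and that sources and sinks are picked by a simple threshold on the divergence. The function names, the grid layout, and the threshold are hypothetical and are not taken from the paper.

```python
import numpy as np

def divergence(u, v, spacing=1.0):
    """Finite-difference divergence of a 2D vector field.

    u, v: x- and y-components sampled on a grid indexed [row (y), column (x)].
    """
    du_dx = np.gradient(u, spacing, axis=1)  # derivative along columns (x)
    dv_dy = np.gradient(v, spacing, axis=0)  # derivative along rows (y)
    return du_dx + dv_dy

def sources_and_sinks(u, v, threshold):
    """Grid indices with strongly positive (source) or negative (sink) divergence.

    The threshold is an illustrative assumption, not the paper's criterion.
    """
    div = divergence(u, v)
    sources = np.argwhere(div > threshold)
    sinks = np.argwhere(div < -threshold)
    return sources, sinks

# Hypothetical test field: vectors pointing away from the origin, (u, v) = (x, y).
ys, xs = np.mgrid[-2:3, -2:3].astype(float)
sources, sinks = sources_and_sinks(xs, ys, threshold=1.0)
```

For this radial field the divergence is constant and positive, so every grid cell is reported as a source and none as a sink. Streamline tracing would then start from such cells.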

@article{zhenyuan2023embroidery,

journal = {Computer Graphics Forum},

title = {Directionality-Aware Design of Embroidery Patterns},

author = {Zhenyuan, Liu and Piovar\v{c}i, Michal and Hafner, Christian and Charrondi\`{e}re, Rapha\"{e}l and Bickel, Bernd},

year = {2023},

volume = {42},

number = {2},

publisher = {The Eurographics Association and John Wiley & Sons Ltd.},

}

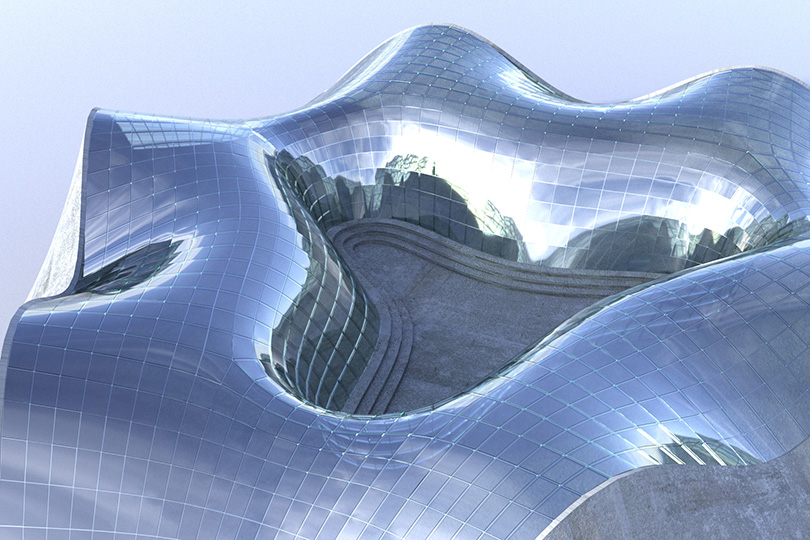

Inverse design problems in fabrication-aware shape optimization are typically solved on discrete representations such as polygonal meshes. This thesis argues that there are benefits to treating these problems in the same domain as human designers, namely, the parametric one. One reason is that discretizing a parametric model usually removes the capability of making further manual changes to the design, because the human intent is captured by the shape parameters. Beyond this, knowledge about a design problem can sometimes reveal a structure that is present in a smooth representation, but is fundamentally altered by discretizing. In this case, working in the parametric domain may even simplify the optimization task. We present two lines of research that explore both of these aspects of fabrication-aware shape optimization on parametric representations.

The first project studies the design of plane elastic curves and Kirchhoff rods, which are common mathematical models for describing the deformation of thin elastic rods such as beams, ribbons, cables, and hair. Our main contribution is a characterization of all curved shapes that can be attained by bending and twisting elastic rods having a stiffness that is allowed to vary across the length. Elements like these can be manufactured using digital fabrication devices such as 3D printers and digital cutters, and have applications in free-form architecture and soft robotics.

We show that the family of curved shapes that can be produced this way admits a geometric description that is concise and computationally convenient. In the case of plane curves, the geometric description is intuitive enough to allow a designer to determine whether a curved shape is physically achievable by visual inspection alone. We also present shape optimization algorithms that convert a user-defined curve in the plane or in three dimensions into the geometry of an elastic rod that will naturally deform to follow this curve when its endpoints are attached to a support structure. Implemented in an interactive software design tool, the rod geometry is generated in real time as the user edits a curve and enables fast prototyping.

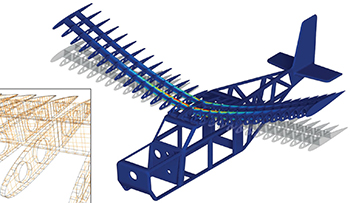

The second project tackles the problem of general-purpose shape optimization on CAD models using a novel variant of the extended finite element method (XFEM). Our goal is the decoupling between the simulation mesh and the CAD model, so no geometry-dependent meshing or remeshing needs to be performed when the CAD parameters change during optimization. This is achieved by discretizing the embedding space of the CAD model, and using a new high-accuracy numerical integration method to enable XFEM on free-form elements bounded by the parametric surface patches of the model. Our simulation is differentiable from the CAD parameters to the simulation output, which enables us to use off-the-shelf gradient-based optimization procedures. The result is a method that fits seamlessly into the CAD workflow because it works on the same representation as the designer, enabling the alternation of manual editing and fabrication-aware optimization at will.

@phdthesis{HafnerThesis2023,

author = {Christian Hafner},

title = {Inverse Shape Design with Parametric Representations: Kirchhoff Rods and Parametric Surface Models},

school = {ISTA},

year = {2023},

month = {5}

}

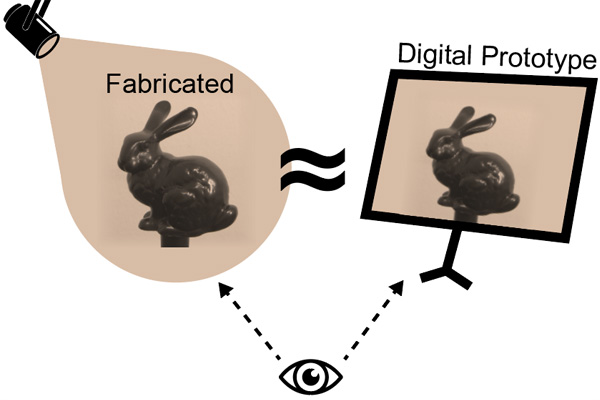

ACM Siggraph Asia 2022 Conference Papers

A good match of material appearance between real-world objects and their digital on-screen representations is critical for many applications such as fabrication, design, and e-commerce. However, faithful appearance reproduction is challenging, especially for complex phenomena, such as gloss. In most cases, the view-dependent nature of gloss and the range of luminance values required for reproducing glossy materials exceed the current capabilities of display devices. As a result, appearance reproduction poses significant problems even with accurately rendered images. This paper studies the gap between the gloss perceived from real-world objects and their digital counterparts. Based on our psychophysical experiments on a wide range of 3D printed samples and their corresponding photographs, we derive insights on the influence of geometry, illumination, and the display’s brightness and measure the change in gloss appearance due to the display limitations. Our evaluation experiments demonstrate that using the prediction to correct material parameters in a rendering system improves the match of gloss appearance between real objects and their visualization on a display device.

@inproceedings{Chen2022,

author = {Chen, Bin and Piovar\v{c}i, Michal and Wang, Chao and Seidel, Hans-Peter and Didyk, Piotr and Myszkowski, Karol and Serrano, Ana},

title = {Gloss Management for Consistent Reproduction of Real and Virtual Objects},

year = {2022},

isbn = {9781450394703},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3550469.3555406},

doi = {10.1145/3550469.3555406},

booktitle = {SIGGRAPH Asia 2022 Conference Papers},

articleno = {35},

numpages = {9},

keywords = {glossiness reproduction, glossiness perception},

location = {Daegu, Republic of Korea},

series = {SA '22}

}

British Machine Vision Conference (BMVC) 2022

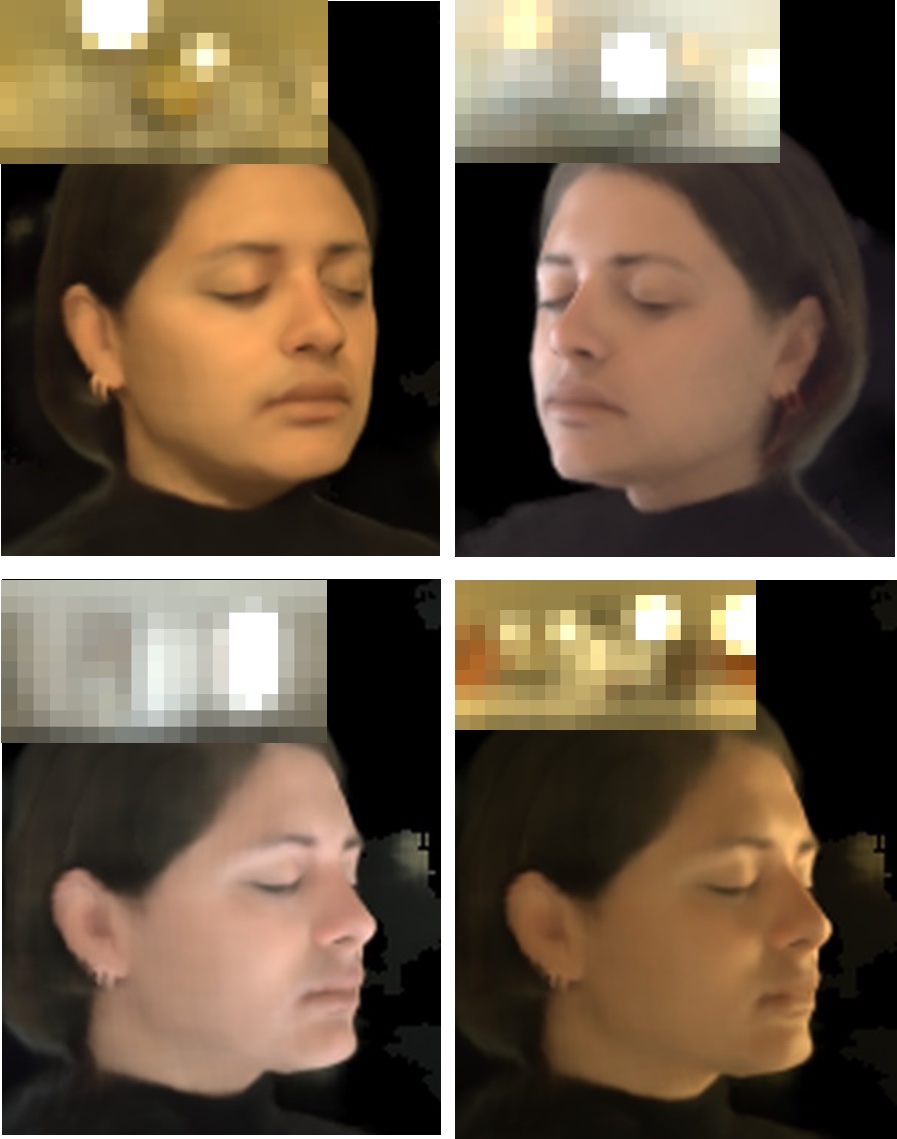

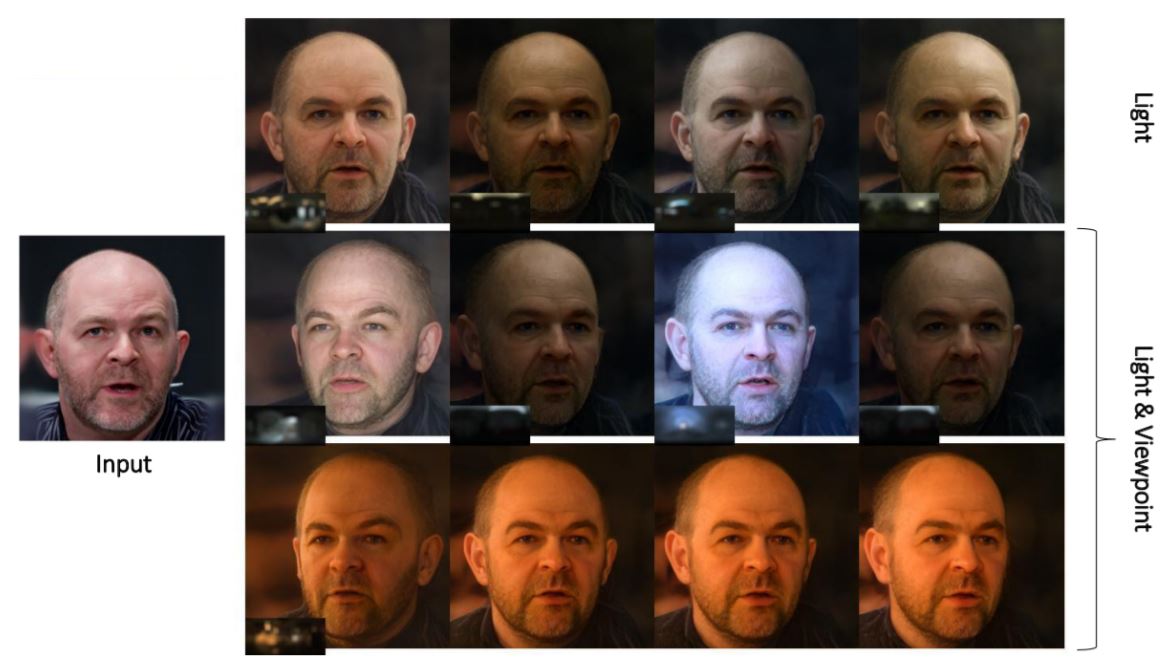

Portrait viewpoint and illumination editing is an important problem with several applications in VR/AR, movies, and photography. Comprehensive knowledge of geometry and illumination is critical for obtaining photorealistic results. Current methods are unable to explicitly model in 3D while handling both viewpoint and illumination editing from a single image. In this paper, we propose VoRF, a novel approach that can take even a single portrait image as input and relight human heads under novel illuminations that can be viewed from arbitrary viewpoints. VoRF represents a human head as a continuous volumetric field and learns a prior model of human heads using a coordinate-based MLP with individual latent spaces for identity and illumination. The prior model is learnt in an auto-decoder manner over a diverse class of head shapes and appearances, allowing VoRF to generalize to novel test identities from a single input image. Additionally, VoRF has a reflectance MLP that uses the intermediate features of the prior model for rendering One-Light-at-A-Time (OLAT) images under novel views. We synthesize novel illuminations by combining these OLAT images with target environment maps. Qualitative and quantitative evaluations demonstrate the effectiveness of VoRF for relighting and novel view synthesis, even when applied to unseen subjects under uncontrolled illuminations.
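Because light transport is linear, combining OLAT images with an environment map amounts to a weighted sum of the per-light images. The following is a minimal sketch of that final step only, assuming the OLAT renderings are already available as an array and the environment map has been sampled to one RGB weight per light direction. The function name and array shapes are hypothetical, and solid-angle weighting is omitted.

```python
import numpy as np

def relight(olat_images, env_weights):
    """Combine One-Light-at-A-Time images into one relit image.

    olat_images: array of shape (n_lights, H, W, 3), one image per light.
    env_weights: array of shape (n_lights, 3), RGB radiance of the target
                 environment map sampled at each light direction (assumed given).
    Returns an (H, W, 3) image: sum over lights of weight * OLAT image.
    """
    return np.einsum("nhwc,nc->hwc", olat_images, env_weights)

# Hypothetical data: 4 lights, 2x3 pixel images.
rng = np.random.default_rng(0)
olat = rng.random((4, 2, 3, 3))
weights = rng.random((4, 3))
image = relight(olat, weights)
```

The `einsum` call sums over the light index while keeping the color channels separate, which is equivalent to looping over the lights and accumulating `weights[i] * olat[i]`.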

Computer Graphics Forum (2022)

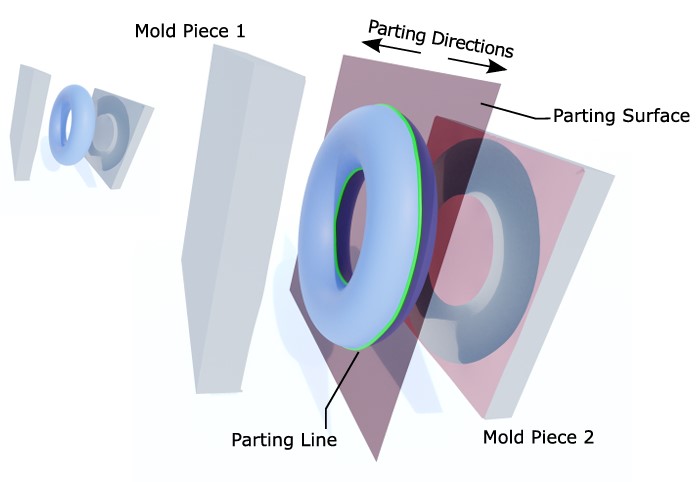

Moulding refers to a set of manufacturing techniques in which a mould, usually a cavity or a solid frame, is used to shape a liquid or pliable material into an object of the desired shape. The popularity of moulding comes from its effectiveness, scalability and versatility in terms of employed materials. Its relevance as a fabrication process is demonstrated by the extensive literature covering different aspects related to mould design, from material flow simulation to the automation of mould geometry design. In this state-of-the-art report, we provide an extensive review of the automatic methods for the design of moulds, focusing on contributions from a geometric perspective. We classify existing mould design methods based on their computational approach and the nature of their target moulding process. We summarize the relationships between computational approaches and moulding techniques, highlighting their strengths and limitations. Finally, we discuss potential future research directions.

ACM Transactions on Graphics (Siggraph 2022)

Enabling additive manufacturing to employ a wide range of novel, functional materials can be a major boost to this technology. However, making such materials printable requires painstaking trial-and-error by an expert operator, as they typically tend to exhibit peculiar rheological or hysteresis properties. Even in the case of successfully finding the process parameters, there is no guarantee of print-to-print consistency due to material differences between batches. These challenges make closed-loop feedback an attractive option where the process parameters are adjusted on-the-fly. There are several challenges for designing an efficient controller: the deposition parameters are complex and highly coupled, artifacts occur after long time horizons, simulating the deposition is computationally costly, and learning on hardware is intractable. In this work, we demonstrate the feasibility of learning a closed-loop control policy for additive manufacturing using reinforcement learning. We show that approximate, but efficient, numerical simulation is sufficient as long as it allows learning the behavioral patterns of deposition that translate to real-world experiences. In combination with reinforcement learning, our model can be used to discover control policies that outperform baseline controllers. Furthermore, the recovered policies have a minimal sim-to-real gap. We showcase this by applying our control policy in-vivo on a single-layer printer using low and high viscosity materials.

@article{Piovarci2022,

title = {Closed-Loop Control of Direct Ink Writing via Reinforcement Learning},

author = {Michal Piovar\v{c}i and Michael Foshey and Jie Xu and Timothy Erps and Vahid Babaei and Piotr Didyk and Szymon Rusinkiewicz and Wojciech Matusik and Bernd Bickel},

year = {2022},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH)},

volume = {41},

number = {4}

}

ACM Transactions on Graphics (Siggraph 2022)

Interlocking puzzles are intriguing geometric games where the puzzle pieces are held together based on their geometric arrangement, preventing the puzzle from falling apart. High-level-of-difficulty, or simply high-level, interlocking puzzles are a subclass of interlocking puzzles that require multiple moves to take out the first subassembly from the puzzle. Solving a high-level interlocking puzzle is a challenging task since one has to explore many different configurations of the puzzle pieces until reaching a configuration where the first subassembly can be taken out. Designing a high-level interlocking puzzle with a user-specified level of difficulty is even harder since the puzzle pieces have to be interlocking in all the configurations before the first subassembly is taken out. In this paper, we present a computational approach to design high-level interlocking puzzles. The core idea is to represent all possible configurations of an interlocking puzzle as well as transitions among these configurations using a rooted, undirected graph called a disassembly graph and leverage this graph to find a disassembly plan that requires a minimal number of moves to take out the first subassembly from the puzzle. At the design stage, our algorithm iteratively constructs the geometry of each puzzle piece to expand the disassembly graph incrementally, aiming to achieve a user-specified level of difficulty. We show that our approach allows efficient generation of high-level interlocking puzzles of various shape complexities, including new solutions not attainable by state-of-the-art approaches.
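The role of the disassembly graph can be illustrated with a breadth-first search that returns the fewest moves needed to reach a configuration from which a subassembly can be taken out. This is a minimal sketch under assumed conventions: the dictionary encoding of the graph, the configuration labels, and the choice to count the final removal as one move are hypothetical and not taken from the paper.

```python
from collections import deque

def min_moves_to_remove(graph, root, removable):
    """Breadth-first search over a disassembly graph.

    graph: dict mapping each configuration to the configurations one move away
           (edges are undirected, so both directions are listed).
    root: the fully assembled configuration.
    removable: set of configurations from which a subassembly can be taken out.
    Returns the number of moves, counting the final removal as one move
    (an assumed convention), or None if no such configuration is reachable.
    """
    dist = {root: 0}
    queue = deque([root])
    while queue:
        state = queue.popleft()
        if state in removable:
            return dist[state] + 1  # +1 for the move that takes the piece out
        for nxt in graph.get(state, ()):
            if nxt not in dist:
                dist[nxt] = dist[state] + 1
                queue.append(nxt)
    return None

# Hypothetical puzzle with four configurations; only "D" allows a removal.
graph = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A"], "D": ["B"]}
level = min_moves_to_remove(graph, "A", {"D"})
```

Because breadth-first search visits configurations in order of distance from the root, the first removable configuration it reaches gives the minimal move count.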

@article{Chen-2022-HighLevelPuzzle,

title = {Computational Design of High-level Interlocking Puzzles},

author = {Rulin Chen and Ziqi Wang and Peng Song and Bernd Bickel},

year = {2022},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH)},

volume = {41},

number = {4}

}

Computer Graphics Forum (Eurographics 2022)

We study structural rigidity for assemblies with mechanical joints. Existing methods identify whether an assembly is structurally rigid by assuming parts are perfectly rigid. Yet, an assembly identified as rigid may not be that “rigid” in practice, and existing methods cannot quantify how rigid an assembly is. We address this limitation by developing a new measure, worst-case rigidity, to quantify the rigidity of an assembly as the largest possible deformation that the assembly undergoes for arbitrary external loads of fixed magnitude. Computing worst-case rigidity is non-trivial due to non-rigid parts and different joint types. We encode parts and their connections into a stiffness matrix, in which parts are modeled as deformable objects and joints as soft constraints. Based on this, we formulate worst-case rigidity analysis as an optimization that seeks the worst-case deformation of an assembly for arbitrary external loads. Furthermore, we present methods to optimize the geometry and topology of various assemblies to enhance their rigidity, as guided by our rigidity measure. We validate our analysis and optimization on various assembly structures with fabrication.
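For intuition, the worst-case measure can be sketched on a plain linear system K u = f, where the largest displacement over all unit-norm loads is set by the smallest eigenvalue of the stiffness matrix. This is a simplified illustration only: it assumes K is symmetric positive definite, and the function name and the two-spring example are hypothetical. The paper's formulation with joints as soft constraints is more involved.

```python
import numpy as np

def worst_case_deformation(K):
    """Largest displacement norm over all loads f with ||f|| = 1, for K u = f.

    Assumes K is symmetric positive definite (rigid-body modes removed).
    Returns (max displacement norm, unit load that attains it).
    """
    eigvals, eigvecs = np.linalg.eigh(K)  # eigenvalues in ascending order
    lam_min = eigvals[0]
    f_worst = eigvecs[:, 0]               # load along the softest mode
    return 1.0 / lam_min, f_worst

# Hypothetical chain: ground --k1-- node 0 --k2-- node 1, with a weak second spring.
k1, k2 = 100.0, 1.0
K = np.array([[k1 + k2, -k2],
              [-k2,      k2]])
d_max, f = worst_case_deformation(K)
u = np.linalg.solve(K, f)  # displacement under the worst-case load
```

Here the weak spring dominates the softest mode, so the worst-case load acts mostly on the second node. Stiffening that connection would raise the smallest eigenvalue and lower the measure.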

@article{liu2022rigidity,

title = {Worst-Case Rigidity Analysis and Optimization for Assemblies with Mechanical Joints},

author = {Zhenyuan Liu and Jingyu Hu and Hao Xu and Peng Song and Ran Zhang and Bernd Bickel and Chi-Wing Fu},

year = {2022},

journal = {Computer Graphics Forum},

volume = {41},

number = {2},

doi = {10.1111/cgf.14490}

}

ACM Transactions on Graphics 40(6) (SIGGRAPH Asia 2021)

We introduce a novel technique to automatically decompose an input object’s volume into a set of parts that can be represented by two opposite height fields. Such decomposition enables the manufacturing of individual parts using two-piece reusable rigid molds. Our decomposition strategy relies on a new energy formulation that utilizes a pre-computed signal on the mesh volume representing the accessibility for a predefined set of extraction directions. Thanks to this novel formulation, our method allows for efficient optimization of a fabrication-aware partitioning of volumes in a completely automatic way. We demonstrate the efficacy of our approach by generating valid volume partitionings for a wide range of complex objects and physically reproducing several of them.

@article{alderighi2021volume,

author = {Alderighi, Thomas and Malomo, Luigi and Bickel, Bernd and Cignoni, Paolo and Pietroni, Nico},

title = {Volume decomposition for two-piece rigid casting},

journal = {ACM Transactions on Graphics (TOG)},

number = {6},

volume = {40},

year = {2021},

publisher = {ACM}

}

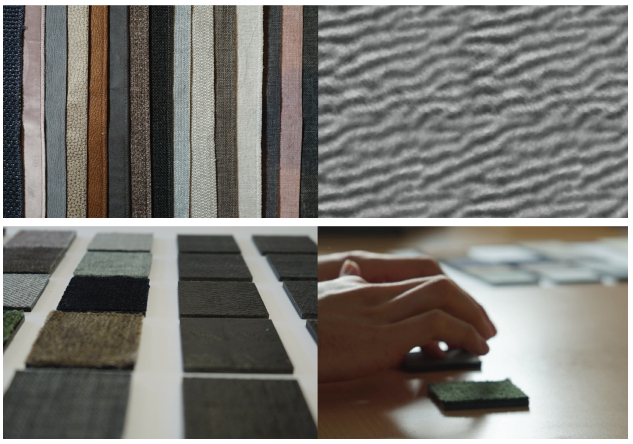

Tactile feedback of an object’s surface enables us to discern its material properties and affordances. This understanding is used in digital fabrication processes by creating objects with high-resolution surface variations to influence a user’s tactile perception. As the design of such surface haptics commonly relies on knowledge from real-life experiences, it is unclear how to adapt this information for digital design methods. In this work, we investigate replicating the haptics of real materials. Using an existing process for capturing an object’s microgeometry, we digitize and reproduce the stable surface information of a set of 15 fabric samples. In a psychophysical experiment, we evaluate the tactile qualities of our set of original samples and their replicas. From our results, we see that direct reproduction of surface variations is able to influence different psychophysical dimensions of the tactile perception of surface textures. While the fabrication process did not preserve all properties, our approach underlines that replication of surface microgeometries benefits fabrication methods in terms of haptic perception by covering a large range of tactile variations. Moreover, by changing the surface structure of a single fabricated material, its material perception can be influenced. We conclude by proposing strategies for capturing and reproducing digitized textures to better resemble the perceived haptics of the originals.

@article{degraen2021capturing,

author = {Degraen, Donald and Piovar{\v{c}}i, Michal and Bickel, Bernd and Kr{\"u}ger, Antonio},

title = {Capturing Tactile Properties of Real Surfaces for Haptic Reproduction},

booktitle = {The 34th Annual ACM Symposium on User Interface Software and Technology},

pages = {954--971},

year = {2021}

}

ACM Transactions on Graphics 40

Material appearance hinges on material reflectance properties but also surface geometry and illumination. The unlimited number of potential combinations between these factors makes understanding and predicting material appearance a very challenging task. In this work, we collect a large-scale dataset of perceptual ratings of appearance attributes with more than 215,680 responses for 42,120 distinct combinations of material, shape, and illumination. The goal of this dataset is twofold. First, we analyze for the first time the effects of illumination and geometry in material perception across such a large collection of varied appearances. We connect our findings to those of the literature, discussing how previous knowledge generalizes across very diverse materials, shapes, and illuminations. Second, we use the collected dataset to train a deep learning architecture for predicting perceptual attributes that correlate with human judgments. We demonstrate the consistent and robust behavior of our predictor in various challenging scenarios, which, for the first time, enables estimating perceived material attributes from general 2D images. Since the predictor relies on the final appearance in an image, it can compare appearance properties across different geometries and illumination conditions. Finally, we demonstrate several applications that use our predictor, including appearance reproduction using 3D printing, BRDF editing by integrating our predictor in a differentiable renderer, illumination design, or material recommendations for scene design.

@article{Serrano2021,

author = { Ana Serrano and Bin Chen and Chao Wang and Michal Piovar\v{c}i and Hans-Peter Seidel and Piotr Didyk and Karol Myszkowski},

title = {The effect of shape and illumination on material perception: model and applications},

journal = {ACM Trans. on Graph.},

volume = {40},

number = {4},

year = {2021},

}

ACM Transactions on Graphics (SIGGRAPH 2021)

Elastic bending of initially flat slender elements allows the realization and economic fabrication of intriguing curved shapes. In this work, we derive an intuitive but rigorous geometric characterization of the design space of plane elastic rods with variable stiffness. It enables designers to determine which shapes are physically viable with active bending by visual inspection alone. Building on these insights, we propose a method for efficiently designing the geometry of a flat elastic rod that realizes a target equilibrium curve, which only requires solving a linear program. We implement this method in an interactive computational design tool that gives feedback about the feasibility of a design, and computes the geometry of the structural elements necessary to realize it within an instant. The tool also offers an iterative optimization routine that improves the fabricability of a model while modifying it as little as possible. In addition, we use our geometric characterization to derive an algorithm for analyzing and recovering the stability of elastic curves that would otherwise snap out of their unstable equilibrium shapes by buckling. We show the efficacy of our approach by designing and manufacturing several physical models that are assembled from flat elements.

@article{hafner2021tdsopec,

author = {Hafner, Christian and Bickel, Bernd},

title = {The Design Space of Plane Elastic Curves},

journal = {ACM Transactions on Graphics (TOG)},

number = {4},

volume = {40},

year = {2021},

publisher = {ACM}

}

ACM Transactions on Graphics 40(5) (Presented at SIGGRAPH Asia 2021)

This paper presents a method for designing planar multistable compliant structures. Given a sequence of desired stable states and the corresponding poses of the structure, we identify the topology and geometric realization of a mechanism, consisting of bars and joints, that is able to physically reproduce the desired multistable behavior. In order to solve this problem efficiently, we build on insights from minimally rigid graph theory to identify simple but effective topologies for the mechanism. We then optimize its geometric parameters, such as joint positions and bar lengths, to obtain correct transitions between the given poses. Simultaneously, we ensure adequate stability of each pose based on an effective approximate error metric related to the elastic energy Hessian of the bars in the mechanism. As demonstrated by our results, we obtain functional multistable mechanisms of manageable complexity that can be fabricated using 3D printing. Further, we evaluate the effectiveness of our method on a large number of examples in simulation and fabricate several physical prototypes.
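As background for the reference to minimally rigid graphs, the sketch below checks the classical Laman counting condition for generic minimal rigidity in the plane: a bar-and-joint graph with n vertices needs exactly 2n − 3 edges, and no subset of k vertices may span more than 2k − 3 edges. This is a brute-force illustration with a hypothetical function name, not the topology search used in the paper, and it is only practical for small graphs.

```python
from itertools import combinations


def is_minimally_rigid_2d(num_vertices, edges):
    """Brute-force Laman count check (exponential time; small graphs only)."""
    edge_set = {frozenset(e) for e in edges}
    if num_vertices < 2:
        return False
    # Global count: exactly 2n - 3 bars.
    if len(edge_set) != 2 * num_vertices - 3:
        return False
    # Local count: every k-vertex subset spans at most 2k - 3 bars.
    for k in range(2, num_vertices + 1):
        for subset in combinations(range(num_vertices), k):
            members = set(subset)
            spanned = sum(1 for e in edge_set if e <= members)
            if spanned > 2 * k - 3:
                return False
    return True


triangle = [(0, 1), (1, 2), (2, 0)]
square = [(0, 1), (1, 2), (2, 3), (3, 0)]
braced_square = square + [(0, 2)]
print(is_minimally_rigid_2d(3, triangle))       # rigid
print(is_minimally_rigid_2d(4, square))         # flexible four-bar linkage
print(is_minimally_rigid_2d(4, braced_square))  # rigid after adding one diagonal
```

The unbraced square fails the count because it keeps one internal degree of freedom, which is the kind of residual mobility a mechanism designer may want to retain or remove deliberately.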

@article{zhang2021multistable,

author = {Zhang, Ran and Auzinger, Thomas and Bickel, Bernd},

title = {Computational Design of Planar Multistable Compliant Structures},

journal = {ACM Transactions on Graphics (TOG)},

volume = {40},

number = {5},

pages = {1--16},

year = {2021},

publisher = {ACM}

}

ACM Transactions on Graphics 40(4) (SIGGRAPH 2021)

Photorealistic editing of head portraits is a challenging task as humans are very sensitive to inconsistencies in faces. We present an approach for high-quality intuitive editing of the camera viewpoint and scene illumination (parameterised with an environment map) in a portrait image. This requires our method to capture and control the full reflectance field of the person in the image. Most editing approaches rely on supervised learning using training data captured with setups such as light and camera stages. Such datasets are expensive to acquire, not readily available and do not capture all the rich variations of in-the-wild portrait images. In addition, most supervised approaches only focus on relighting, and do not allow camera viewpoint editing. Thus, they only capture and control a subset of the reflectance field. Recently, portrait editing has been demonstrated by operating in the generative model space of StyleGAN. While such approaches do not require direct supervision, there is a significant loss of quality when compared to the supervised approaches. In this paper, we present a method which learns from limited supervised training data. The training images only include people in a fixed neutral expression with eyes closed, without much hair or background variations. Each person is captured under 150 one-light-at-a-time conditions and under 8 camera poses. Instead of training directly in the image space, we design a supervised problem which learns transformations in the latent space of StyleGAN. This combines the best of supervised learning and generative adversarial modeling. We show that the StyleGAN prior allows for generalisation to different expressions, hairstyles and backgrounds. This produces high-quality photorealistic results for in-the-wild images and significantly outperforms existing methods. Our approach can edit the illumination and pose simultaneously, and runs at interactive rates.

@article{mallikarjun2021photoapp,

title={PhotoApp: Photorealistic appearance editing of head portraits},

author={Mallikarjun, BR and Tewari, Ayush and Dib, Abdallah and Weyrich, Tim and Bickel, Bernd and Seidel, Hans Peter and Pfister, Hanspeter and Matusik, Wojciech and Chevallier, Louis and Elgharib, Mohamed A and others},

journal={ACM Transactions on Graphics},

volume={40},

number={4},

year={2021}

}

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition

The reflectance field of a face describes the reflectance properties responsible for complex lighting effects including diffuse, specular, inter-reflection and self-shadowing. Most existing methods for estimating the face reflectance from a monocular image assume faces to be diffuse with very few approaches adding a specular component. This still leaves out important perceptual aspects of reflectance as higher-order global illumination effects and self-shadowing are not modeled. We present a new neural representation for face reflectance where we can estimate all components of the reflectance responsible for the final appearance from a single monocular image. Instead of modeling each component of the reflectance separately using parametric models, our neural representation allows us to generate a basis set of faces in a geometric deformation-invariant space, parameterized by the input light direction, viewpoint and face geometry. We learn to reconstruct this reflectance field of a face just from a monocular image, which can be used to render the face from any viewpoint in any light condition. Our method is trained on a light-stage training dataset, which captures 300 people illuminated with 150 light conditions from 8 viewpoints. We show that our method outperforms existing monocular reflectance reconstruction methods in terms of photorealism due to better capturing of physical primitives, such as sub-surface scattering, specularities, self-shadows and other higher-order effects.

@inproceedings{tewari2021monocular,

title={Monocular Reconstruction of Neural Face Reflectance Fields},

author={Tewari, Ayush and Oh, Tae-Hyun and Weyrich, Tim and Bickel, Bernd and Seidel, Hans-Peter and Pfister, Hanspeter and Matusik, Wojciech and Elgharib, Mohamed and Theobalt, Christian and others},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={4791--4800},

year={2021}

}

The Visual Computer 2021

The understanding of material appearance perception is a complex problem due to interactions between material reflectance, surface geometry, and illumination. Recently, Serrano et al. collected the largest dataset to date with subjective ratings of material appearance attributes, including glossiness, metallicness, sharpness and contrast of reflections. In this work, we make use of their dataset to investigate for the first time the impact of the interactions between illumination, geometry, and eight different material categories in perceived appearance attributes. After an initial analysis, we select for further analysis the four material categories that cover the largest range for all perceptual attributes: fabric, plastic, ceramic, and metal. Using a cumulative link mixed model (CLMM) for robust regression, we discover interactions between these material categories and four representative illuminations and object geometries. We believe that our findings contribute to expanding the knowledge on material appearance perception and can be useful for many applications, such as scene design, where any particular material in a given shape can be aligned with dominant classes of illumination, so that a desired strength of appearance attributes can be achieved.
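For readers unfamiliar with cumulative link models, the following sketch shows how ordinal rating probabilities arise from a logit link: the probability of a rating at or below category j is the logistic function of a threshold minus a linear predictor. The thresholds and predictor values are made-up illustrative numbers, and the function names are hypothetical; in a mixed model the predictor would additionally contain a random effect, for example one per participant.

```python
import math


def logistic(x):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def category_probabilities(thresholds, eta):
    """Ordinal category probabilities under a cumulative logit model.

    thresholds: strictly increasing cut points (one fewer than the categories).
    eta: linear predictor (fixed effects plus any random intercept).
    """
    cumulative = [logistic(t - eta) for t in thresholds] + [1.0]
    probs = []
    previous = 0.0
    for c in cumulative:
        probs.append(c - previous)
        previous = c
    return probs


# Four rating categories; a larger predictor shifts mass to higher ratings.
baseline = category_probabilities([-1.0, 0.0, 1.0], eta=0.0)
shifted = category_probabilities([-1.0, 0.0, 1.0], eta=2.0)
print(baseline)
print(shifted)
```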

@article{Chen2021,

author = { Bin Chen and Chao Wang and Michal Piovar\v{c}i and Hans-Peter Seidel and Piotr Didyk and Karol Myszkowski and Ana Serrano},

title = {The effect of geometry and illumination on appearance perception of different material categories},

journal = {The Visual Computer},

volume = {37},

year = {2021},

}

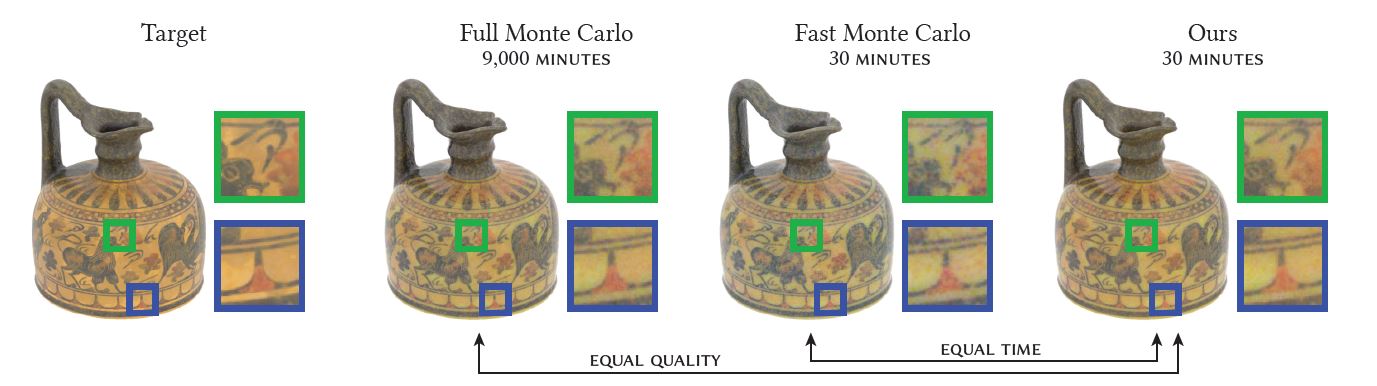

Computer Graphics Forum (Eurographics 2021)

With the wider availability of full-color 3D printers, color-accurate 3D-print preparation has received increased attention. A key challenge lies in the inherent translucency of commonly used print materials that blurs out details of the color texture. Previous work tries to compensate for these scattering effects through strategic assignment of colored primary materials to printer voxels. To date, the highest-quality approach uses iterative optimization that relies on computationally expensive Monte Carlo light transport simulation to predict the surface appearance from subsurface scattering within a given print material distribution; that optimization, however, takes on the order of days on a single machine. In our work, we dramatically speed up the process by replacing the light transport simulation with a data-driven approach. Leveraging a deep neural network to predict the scattering within a highly heterogeneous medium, our method performs around two orders of magnitude faster than Monte Carlo rendering while yielding optimization results of similar quality level. The network is based on an established method from atmospheric cloud rendering, adapted to our domain and extended by a physically motivated weight sharing scheme that substantially reduces the network size. We analyze its performance in an end-to-end print preparation pipeline, compare quality and runtime to alternative approaches, and demonstrate its generalization to unseen geometry and material values. This for the first time enables full heterogeneous material optimization for 3D-print preparation within time frames on the order of the actual printing time.

@article{rittig2021neural,

title={Neural Acceleration of Scattering-Aware Color 3D Printing},

author={Rittig, Tobias and Sumin, Denis and Babaei, Vahid and Didyk, Piotr and Voloboy, Alexey and Wilkie, Alexander and Bickel, Bernd and Myszkowski, Karol and Weyrich, Tim and K{\v{r}}iv{\'a}nek, Jaroslav},

journal={Computer Graphics Forum},

volume={40},

number={2},

pages={205--219},

year={2021},

publisher={Wiley Online Library}

}

Volumetric light transport is a pervasive physical phenomenon, and therefore its accurate simulation is important for a broad array of disciplines. While suitable mathematical models for computing the transport are now available, obtaining the necessary material parameters needed to drive such simulations is a challenging task: direct measurements of these parameters from material samples are seldom possible. Building on the inverse scattering paradigm, we present a novel measurement approach which indirectly infers the transport parameters from extrinsic observations of multiple-scattered radiance. The novelty of the proposed approach lies in replacing structured illumination with a structured reflector bonded to the sample, and a robust fitting procedure that largely compensates for potential systematic errors in the calibration of the setup. We show the feasibility of our approach by validating simulations of complex 3D compositions of the measured materials against physical prints, using photo-polymer resins. As presented in this paper, our technique yields colorspace data suitable for accurate appearance reproduction in the area of 3D printing. Beyond that, and without fundamental changes to the basic measurement methodology, it could equally well be used to obtain spectral measurements that are useful for other application areas.

@article{Elek:21,

author = {Oskar Elek and Ran Zhang and Denis Sumin and Karol Myszkowski and Bernd Bickel and Alexander Wilkie and Jaroslav K\v{r}iv\'{a}nek and Tim Weyrich},

journal = {Opt. Express},

keywords = {Illumination design; Inverse scattering; Medical imaging; Optical properties; Scattering media; Structured illumination microscopy},

number = {5},

pages = {7568--7588},

publisher = {OSA},

title = {Robust and practical measurement of volume transport parameters in solid photo-polymer materials for 3D printing},

volume = {29},

month = {Mar},

year = {2021},

url = {http://www.osapublishing.org/oe/abstract.cfm?URI=oe-29-5-7568},

doi = {10.1364/OE.406095}

}

ACM Transactions on Graphics (SIGGRAPH Asia 2020)

Cold bent glass is a promising and cost-efficient method for realizing doubly curved glass façades. They are produced by attaching planar glass sheets to curved frames and must keep the occurring stress within safe limits. However, it is very challenging to navigate the design space of cold bent glass panels because of the fragility of the material, which impedes the form finding for practically feasible and aesthetically pleasing cold bent glass façades. We propose an interactive, data-driven approach for designing cold bent glass façades that can be seamlessly integrated into a typical architectural design pipeline. Our method allows non-expert users to interactively edit a parametric surface while providing real-time feedback on the deformed shape and maximum stress of cold bent glass panels. The designs are automatically refined to minimize several fairness criteria, while maximal stresses are kept within glass limits. We achieve interactive frame rates by using a differentiable Mixture Density Network trained from more than a million simulations. Given a curved boundary, our regression model is capable of handling multistable configurations and accurately predicting the equilibrium shape of the panel and its corresponding maximal stress. We show that the predictions are highly accurate and validate our results with a physical realization of a cold bent glass surface.
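To give a sense of why a mixture density model suits multistable predictions, the sketch below evaluates the negative log-likelihood of a scalar target under a Gaussian mixture, the kind of loss such networks are commonly trained with. It is a one-dimensional illustration with made-up numbers and a hypothetical function name; the network architecture and exact loss used in the paper are not described here and are not reproduced.

```python
import math


def mixture_nll(y, weights, means, sigmas):
    """Negative log-likelihood of y under a 1D Gaussian mixture.

    weights must be positive and sum to one; sigmas must be positive.
    Uses the log-sum-exp trick for numerical stability.
    """
    log_terms = []
    for w, mu, sigma in zip(weights, means, sigmas):
        log_gauss = (
            -0.5 * math.log(2.0 * math.pi)
            - math.log(sigma)
            - 0.5 * ((y - mu) / sigma) ** 2
        )
        log_terms.append(math.log(w) + log_gauss)
    m = max(log_terms)
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))


# Two equally likely equilibrium shapes, summarized here by one scalar each.
weights, means, sigmas = [0.5, 0.5], [-1.0, 1.0], [0.1, 0.1]
print(mixture_nll(1.0, weights, means, sigmas))  # near a mode: low loss
print(mixture_nll(0.0, weights, means, sigmas))  # between modes: high loss
```

A plain regression trained with squared error would be pulled towards the average of the two modes, which in this toy example is exactly the value the mixture marks as unlikely.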

@article{Gavriil2020,

author = {Gavriil, Konstantinos and Guseinov, Ruslan and P{\'e}rez, Jes{\'u}s and Pellis, Davide and Henderson, Paul and Rist, Florian and Pottmann, Helmut and Bickel, Bernd},

title = {Computational Design of Cold Bent Glass Fa{\c c}ades},

journal = {ACM Transactions on Graphics (SIGGRAPH Asia 2020)},

year = {2020},

month = {12},

volume = {39},

number = {6},

articleno = {208},

numpages = {16}

}

Fabrication of curved shells plays an important role in modern design, industry, and science. Among their remarkable properties are, for example, aesthetics of organic shapes, ability to evenly distribute loads, or efficient flow separation. They find applications across vast length scales ranging from sky-scraper architecture to microscopic devices. But, at the same time, the design of curved shells and their manufacturing process pose a variety of challenges. In this thesis, they are addressed from several perspectives. In particular, this thesis presents approaches based on the transformation of initially flat sheets into the target curved surfaces. This involves problems of interactive design of shells with nontrivial mechanical constraints, inverse design of complex structural materials, and data-driven modeling of delicate and time-dependent physical properties. At the same time, two newly developed self-morphing mechanisms targeting flat-to-curved transformation are presented.

In architecture, doubly curved surfaces can be realized as cold bent glass panelizations. Originally flat glass panels are bent into frames and remain stressed. This is a cost-efficient fabrication approach compared to hot bending, in which glass panels are shaped plastically. However, such constructions are prone to breaking during bending, and it is highly nontrivial to navigate the design space while keeping the panels fabricable and aesthetically pleasing at the same time. We introduce an interactive design system for cold bent glass façades, whereas previously even offline optimization for such scenarios had not been sufficiently developed. Our method is based on a deep learning approach providing quick and high-precision estimation of glass panel shape and stress while handling the shape multimodality.

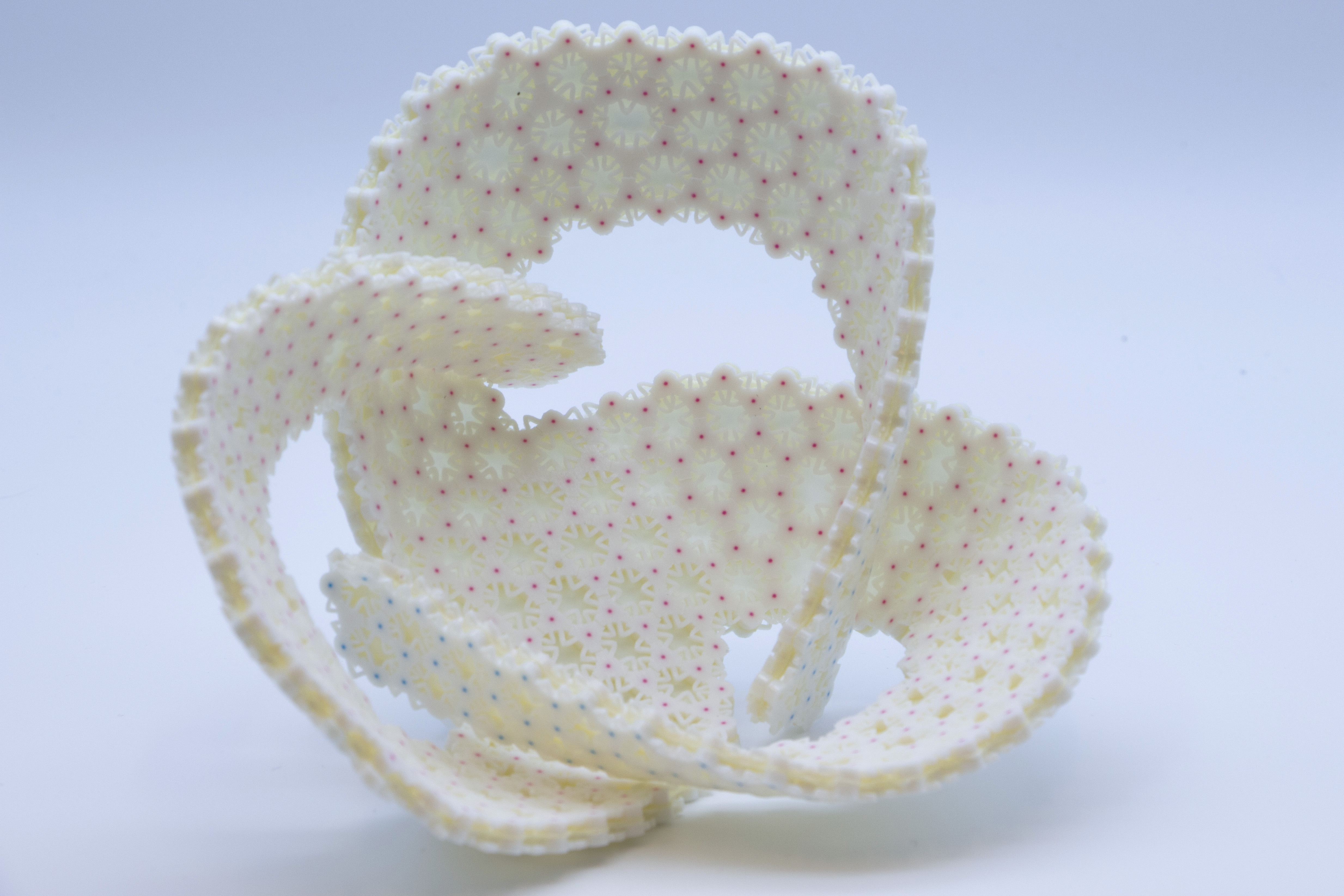

Fabrication of smaller objects at scales below 1 m can also greatly benefit from shaping originally flat sheets. In this respect, we designed new self-morphing shell mechanisms transforming from an initial flat state to a doubly curved state with high precision and detail. Our so-called CurveUps demonstrate the encoding of geometric information into the shell. Furthermore, we explored the frontiers of programmable materials and showed how temporal information can additionally be encoded into a flat shell. This allows prescribing deformation sequences for doubly curved surfaces and, thus, facilitates self-collision avoidance, enabling complex shapes and functionalities otherwise impossible. Both of these methods include inverse design tools keeping the user in the design loop.

@phdthesis{GuseinovCDoCTS2020,

author = {Guseinov, Ruslan},

title = {Computational design of curved thin shells: from glass façades to programmable matter},

school = {IST Austria},

year = {2020},

month = {9}

}

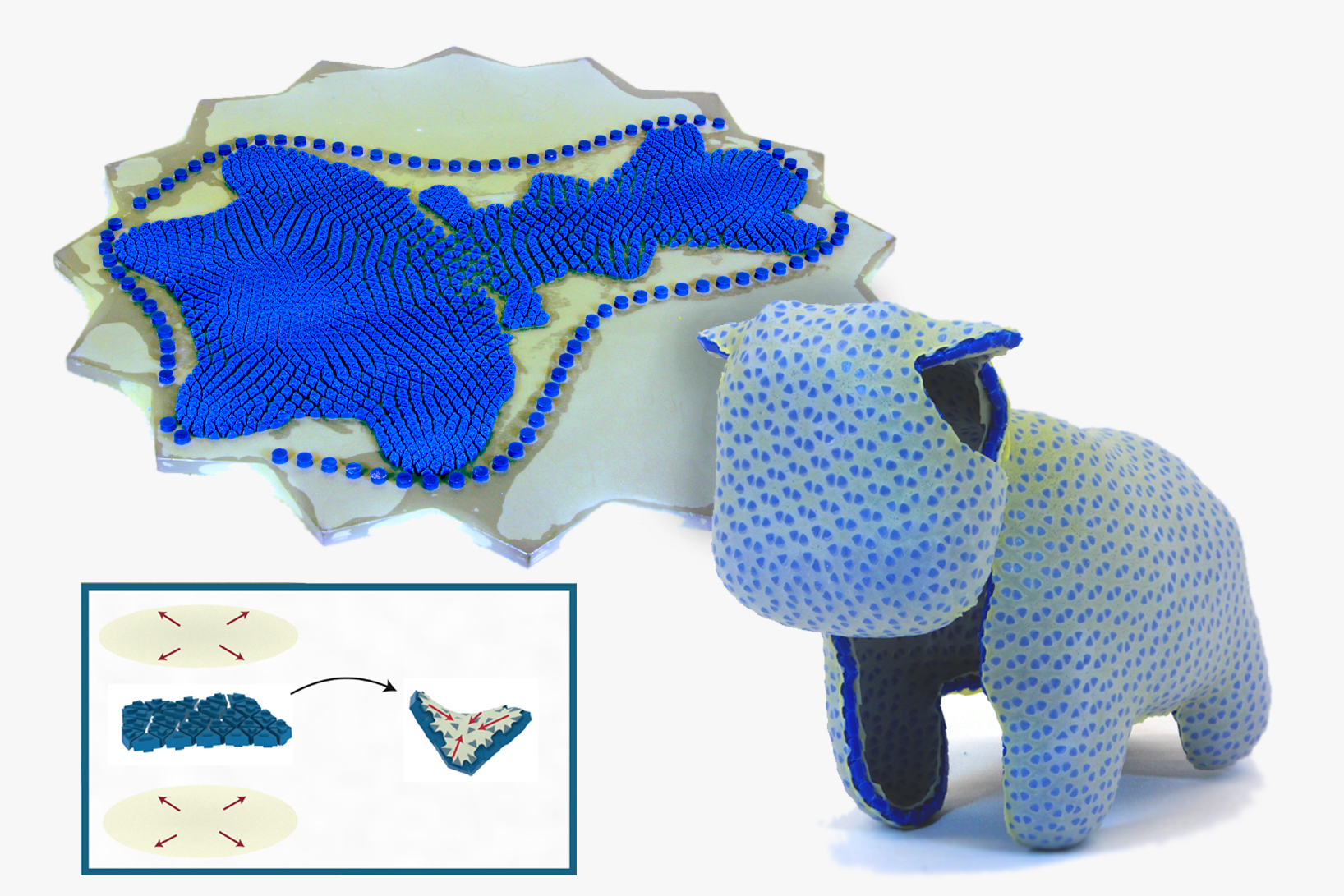

Advances in shape-morphing materials, such as hydrogels, shape-memory polymers and light-responsive polymers have enabled prescribing self-directed deformations of initially flat geometries. However, most proposed solutions evolve towards a target geometry without considering time-dependent actuation paths. To achieve more complex geometries and avoid self-collisions, it is critical to encode a spatial and temporal shape evolution within the initially flat shell. Recent realizations of time-dependent morphing are limited to the actuation of few, discrete hinges and cannot form doubly curved surfaces. Here, we demonstrate a method for encoding temporal shape evolution in architected shells that assume complex shapes and doubly curved geometries. The shells are non-periodic tessellations of pre-stressed contractile unit cells that soften in water at rates prescribed locally by mesostructure geometry. The ensuing midplane contraction is coupled to the formation of encoded curvatures. We propose an inverse design tool based on a data-driven model for unit cells’ temporal responses.

@article{Guseinov2020,

author={Guseinov, Ruslan and McMahan, Connor and P{\'e}rez, Jes{\'u}s and Daraio, Chiara and Bickel, Bernd},

title={Programming temporal morphing of self-actuated shells},

journal={Nature Communications},

year={2020},

volume={11},

number={1},

pages={237},

issn={2041-1723},

doi={10.1038/s41467-019-14015-2},

url={https://doi.org/10.1038/s41467-019-14015-2}

}

IEEE Transactions on Visualization and Computer Graphics 27(6)

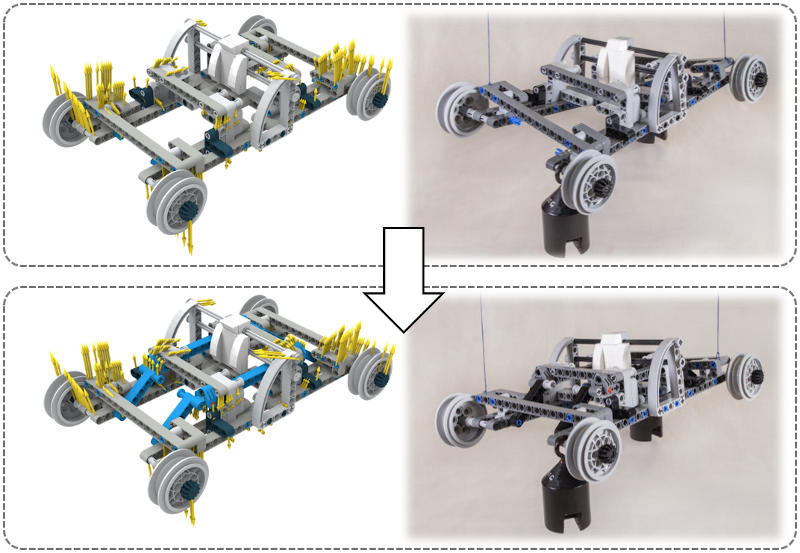

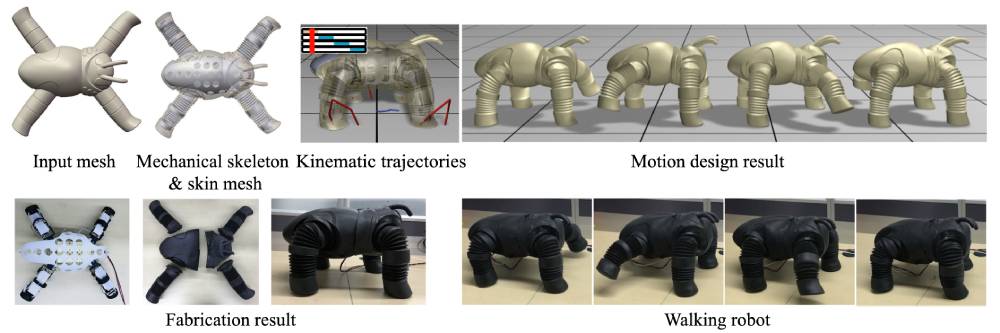

We present a computational design system that assists users to model, optimize, and fabricate quad-robots with soft skins. Our system addresses the challenging task of predicting their physical behavior by fully integrating the multibody dynamics of the mechanical skeleton and the elastic behavior of the soft skin. The developed motion control strategy uses an alternating optimization scheme to avoid expensive full space-time optimization, interleaving space-time optimization for the skeleton and frame-by-frame optimization for the full dynamics. The outputs are motor torques that drive the robot along a user-prescribed motion trajectory. We also provide a collection of convenient engineering tools and empirical manufacturing guidance to support the fabrication of the designed quad-robot. We validate the feasibility of designs generated with our system through physics simulations and with a physically fabricated prototype.

@article{feng2019quadrobots,

author = {Feng, Xudong and Liu, Jiafeng and Wang, Huamin and Yang, Yin and Bao, Hujun and Bickel, Bernd and Xu, Weiwei},

title = {Computational Design of Skinned Quad-Robots},

journal = {IEEE Transactions on Visualization and Computer Graphics},

number = {6},

volume = {27},

year = {2019},

publisher = {IEEE}

}

ACM Transactions on Graphics 38(6) (SIGGRAPH Asia 2019)

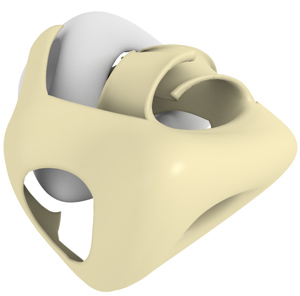

We propose a novel generic shape optimization method for CAD models based on the eXtended Finite Element Method (XFEM). Our method works directly on the intersection between the model and a regular simulation grid, without the need to mesh or remesh, thus removing a bottleneck of classical shape optimization strategies. This is made possible by a novel hierarchical integration scheme that accurately integrates finite element quantities with sub-element precision. For optimization, we efficiently compute analytical shape derivatives of the entire framework, from model intersection to integration rule generation and XFEM simulation. Moreover, we describe a differentiable projection of shape parameters onto a constraint manifold spanned by user-specified shape preservation, consistency, and manufacturability constraints. We demonstrate the utility of our approach by optimizing mass distribution, strength-to-weight ratio, and inverse elastic shape design objectives directly on parameterized 3D CAD models.

@article{Hafner:2019,

author = {Hafner, Christian and Schumacher, Christian and Knoop, Espen and Auzinger, Thomas and Bickel, Bernd and B\"{a}cher, Moritz},

title = {X-CAD: Optimizing CAD Models with Extended Finite Elements},

journal = {ACM Trans. Graph.},

issue_date = {November 2019},

volume = {38},

number = {6},

month = nov,

year = {2019},

issn = {0730-0301},

pages = {157:1--157:15},

articleno = {157},

numpages = {15},

url = {http://doi.acm.org/10.1145/3355089.3356576},

doi = {10.1145/3355089.3356576},

acmid = {3356576},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {CAD processing, XFEM, shape optimization, simulation},

}

FORM and FORCE, IASS Symposium 2019, Structural Membranes 2019

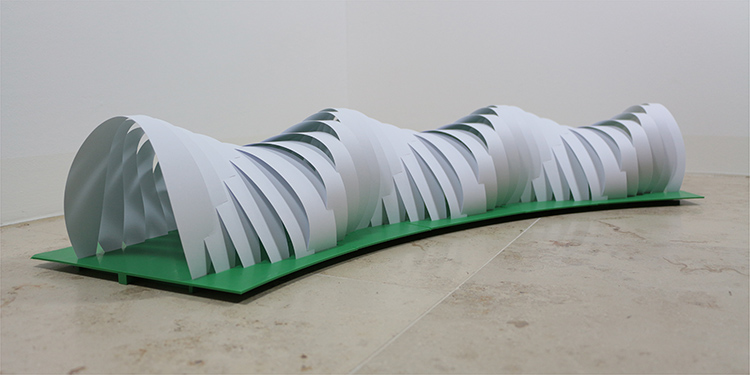

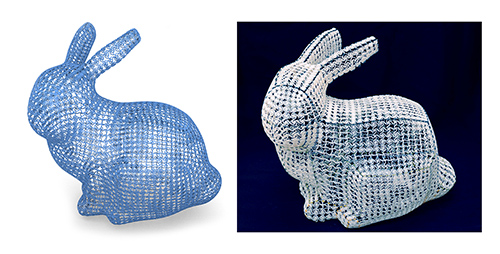

Bending-active structures are able to efficiently produce complex curved shapes starting from flat panels. The desired deformation of the panels derives from the proper selection of their elastic properties. Optimized panels, called FlexMaps, are designed such that, once they are bent and assembled, the resulting static equilibrium configuration matches a desired input 3D shape. The FlexMaps elastic properties are controlled by locally varying spiraling geometric mesostructures, which are optimized in size and shape to match the global curvature (i.e., bending requests) of the target shape. The design pipeline starts from a quad mesh representing the input 3D shape, which determines the edge size and the total number of spirals: every quad will embed one spiral. Then, an optimization algorithm tunes the geometry of the spirals by using a simplified pre-computed rod model. This rod model is derived from a non-linear regression algorithm which approximates the non-linear behavior of solid FEM spiral models subject to hundreds of load combinations. This innovative pipeline has been applied to the project of a lightweight plywood pavilion named FlexMaps Pavilion, which is a single-layer piecewise twisted arc that fits a bounding box of 3.90 × 3.96 × 3.25 meters.

@InProceedings{LMPPPBC19,

author = "Laccone, Francesco and Malomo, Luigi and P\'erez, Jes\'us and Pietroni, Nico and Ponchio, Federico and Bickel, Bernd and Cignoni, Paolo",

title = "FlexMaps Pavilion: a twisted arc made of mesostructured flat flexible panels",

booktitle = "FORM and FORCE, IASS Symposium 2019, Structural Membranes 2019",

pages = "498-504",

month = "oct",

year = "2019",

editor = "Carlos L\'azaro and Kai-Uwe Bletzinger and Eugenio O\~{n}ate",

publisher = "International Centre for Numerical Methods in Engineering (CIMNE)",

url = "http://vcg.isti.cnr.it/Publications/2019/LMPPPBC19"

}

ACM Transactions on Graphics 38(4) (SIGGRAPH 2019)

Commercially available full-color 3D printing allows for detailed control of material deposition in a volume, but an exact reproduction of a target surface appearance is hampered by the strong subsurface scattering that causes nontrivial volumetric cross-talk at the print surface. Previous work showed how an iterative optimization scheme based on accumulating absorptive materials at the surface can be used to find a volumetric distribution of print materials that closely approximates a given target appearance. In this work, we first revisit the assumption that pushing the absorptive materials to the surface results in minimal volumetric cross-talk. We design a full-fledged optimization on a small domain for this task and confirm this previously reported heuristic. Then, we extend the above approach that is critically limited to color reproduction on planar surfaces, to arbitrary 3D shapes. Our method enables high-fidelity color texture reproduction on 3D prints by effectively compensating for internal light scattering within arbitrarily shaped objects. In addition, we propose a content-aware gamut mapping that significantly improves color reproduction for the pathological case of thin geometric features. Using a wide range of sample objects with complex textures and geometries, we demonstrate color reproduction whose fidelity is superior to state-of-the-art drivers for color 3D printers.

@article{sumin19geometry-aware,

author = {Sumin, Denis and Rittig, Tobias and Babaei, Vahid and Nindel, Thomas and Wilkie, Alexander and Didyk, Piotr and Bickel, Bernd and K\v{r}iv\'anek, Jaroslav and Myszkowski, Karol and Weyrich, Tim},

title = {Geometry-Aware Scattering Compensation for {3D} Printing},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH)},

year = 2019,

month = jul,

volume = 38,

numpages = 14,

keywords = {computational fabrication, appearance reproduction, appearance enhancement, sub-surface light transport, volumetric optimization, gradient rendering},

}

ACM Transactions on Graphics 38(4) (SIGGRAPH 2019)

We propose a novel technique for the automatic design of molds to cast highly complex shapes. The technique generates composite, two-piece molds. Each mold piece is made up of a hard plastic shell and a flexible silicone part. Thanks to the thin, soft, and smartly shaped silicone part, which is kept in place by a hard plastic shell, we can cast objects of unprecedented complexity. An innovative algorithm based on a volumetric analysis defines the layout of the internal cuts in the silicone mold part. Our approach can robustly handle thin protruding features and intertwined topologies that have caused previous methods to fail. We compare our results with state of the art techniques, and we demonstrate the casting of shapes with extremely complex geometry.

@article{Alderighi:2019,

author = {Alderighi, Thomas and Malomo, Luigi and Giorgi, Daniela and Bickel, Bernd and Cignoni, Paolo and Pietroni, Nico},

title = {Volume-aware Design of Composite Molds},

journal = {ACM Trans. Graph.},

issue_date = {July 2019},

volume = {38},

number = {4},

month = jul,

year = {2019},

issn = {0730-0301},

pages = {110:1--110:12},

articleno = {110},

numpages = {12},

url = {http://doi.acm.org/10.1145/3306346.3322981},

doi = {10.1145/3306346.3322981},

acmid = {3322981},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {casting, fabrication, mold design},

}

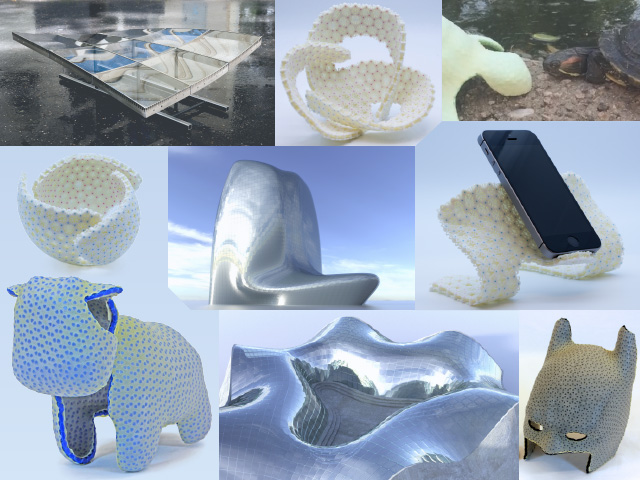

ACM Transactions on Graphics 37(6) (SIGGRAPH Asia 2018)

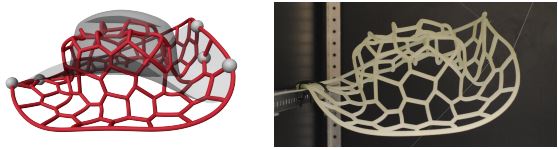

We propose FlexMaps, a novel framework for fabricating smooth shapes out of flat, flexible panels with tailored mechanical properties. We start by mapping the 3D surface onto a 2D domain as in traditional UV mapping to design a set of deformable flat panels called FlexMaps. For these panels, we design and obtain specific mechanical properties such that, once they are assembled, the static equilibrium configuration matches the desired 3D shape. FlexMaps can be fabricated from an almost rigid material, such as wood or plastic, and are made flexible in a controlled way by using computationally designed spiraling microstructures.

@article{MPIPMCB18,

author = "Malomo, Luigi and P\'erez, Jes\'us and Iarussi, Emmanuel and Pietroni, Nico and Miguel, Eder and Cignoni, Paolo and Bickel, Bernd",

title = "FlexMaps: Computational Design of Flat Flexible Shells for Shaping 3D Objects",

journal = "ACM Trans. on Graphics - Siggraph Asia 2018",

number = "6",

volume = "37",

pages = "14",

month = "dec",

year = "2018",

note = "https://doi.org/10.1145/3272127.3275076"

}

ACM Transactions on Graphics 37(4) (SIGGRAPH 2018)

Molding is a popular mass production method, in which the initial expenses for the mold are offset by the low per-unit production cost. However, the physical fabrication constraints of the molding technique commonly restrict the shape of moldable objects. For a complex shape, a decomposition of the object into moldable parts is a common strategy to address these constraints, with plastic model kits being a popular and illustrative example. However, conducting such a decomposition requires considerable expertise, and it depends on the technical aspects of the fabrication technique, as well as aesthetic considerations. We present an interactive technique to create such decompositions for two-piece molding, in which each part of the object is cast between two rigid mold pieces. Given the surface description of an object, we decompose its thin-shell equivalent into moldable parts by first performing a coarse decomposition and then utilizing an active contour model for the boundaries between individual parts. Formulated as an optimization problem, the movement of the contours is guided by an energy reflecting fabrication constraints to ensure the moldability of each part. Simultaneously the user is provided with editing capabilities to enforce aesthetic guidelines. Our interactive interface provides control of the contour positions by allowing, for example, the alignment of part boundaries with object features. Our technique enables a novel workflow, as it empowers novice users to explore the design space, and it generates fabrication-ready two-piece molds that can be used either for casting or industrial injection molding of free-form objects.

@article{Nakashima:2018:10.1145/3197517.3201341,

author = {Nakashima, Kazutaka and Auzinger, Thomas and Iarussi, Emmanuel and Zhang, Ran and Igarashi, Takeo and Bickel, Bernd},

title = {CoreCavity: Interactive Shell Decomposition for Fabrication with Two-Piece Rigid Molds},

journal = {ACM Transactions on Graphics (SIGGRAPH 2018)},

year = {2018},

volume = {37},

number = {4},

pages = {135:1--135:13},

articleno = {135},

numpages = {13},

url = {https://dx.doi.org/10.1145/3197517.3201341},

doi = {10.1145/3197517.3201341},

acmid = {3201341},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {molding, fabrication, height field, decomposition}

}

ACM Transactions on Graphics 37(4) (SIGGRAPH 2018)

Additive manufacturing has recently seen drastic improvements in resolution, making it now possible to fabricate features at scales of hundreds or even dozens of nanometers, which previously required very expensive lithographic methods. As a result, additive manufacturing now seems poised for optical applications, including those relevant to computer graphics, such as material design, as well as display and imaging applications.

In this work, we explore the use of additive manufacturing for generating structural colors, where the structures are designed using a fabrication-aware optimization process. This requires a combination of full-wave simulation, a feasible parameterization of the design space, and a tailored optimization procedure. Many of these components should be re-usable for the design of other optical structures at this scale.

We show initial results of material samples fabricated based on our designs. While these suffer from the prototype character of state-of-the-art fabrication hardware, we believe they clearly demonstrate the potential of additive nanofabrication for structural colors and other graphics applications.

@article{Auzinger:2018:10.1145/3197517.3201376,

author = {Auzinger, Thomas and Heidrich, Wolfgang and Bickel, Bernd},

title = {Computational Design of Nanostructural Color for Additive Manufacturing},

journal = {ACM Transactions on Graphics (SIGGRAPH 2018)},

year = {2018},

volume = {37},

number = {4},

pages = {159:1--159:16},

articleno = {159},

numpages = {16},

url = {http://doi.acm.org/10.1145/3197517.3201376},

doi = {10.1145/3197517.3201376},

acmid = {3201376},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {structural colorization, appearance, multiphoton lithography, direct laser writing, computational fabrication, computational design, shape optimization, FDTD, diffraction, Nanoscribe}

}

ACM Transactions on Graphics 37(4) (SIGGRAPH 2018)

We propose a new method for fabricating digital objects through reusable silicone molds. Molds are generated by casting liquid silicone into custom 3D printed containers called metamolds. Metamolds automatically define the cuts that are needed to extract the cast object from the silicone mold. The shape of metamolds is designed through a novel segmentation technique, which takes into account both geometric and topological constraints involved in the process of mold casting. Our technique is simple, does not require changing the shape or topology of the input objects, and only requires off-the-shelf materials and technologies. We successfully tested our method on a set of challenging examples with complex shapes and rich geometric detail.

@article{Alderighi:2018,

author = {Alderighi, Thomas and Malomo, Luigi and Giorgi, Daniela and Pietroni, Nico and Bickel, Bernd and Cignoni, Paolo},

title = {Metamolds: Computational Design of Silicone Molds},

journal = {ACM Trans. Graph.},

issue_date = {August 2018},

volume = {37},

number = {4},

month = jul,

year = {2018},

issn = {0730-0301},

pages = {136:1--136:13},

articleno = {136},

numpages = {13},

url = {http://doi.acm.org/10.1145/3197517.3201381},

doi = {10.1145/3197517.3201381},

acmid = {3201381},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {casting, fabrication, molding},

}

ACM Transactions on Graphics 37(4) (SIGGRAPH 2018)

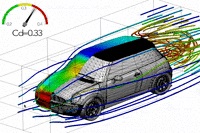

We present a data-driven technique to instantly predict how fluid flows around various three-dimensional objects. Such simulation is useful for computational fabrication and engineering, but is usually computationally expensive since it requires solving the Navier-Stokes equation for many time steps. To accelerate the process, we propose a machine learning framework which predicts aerodynamic forces and velocity and pressure fields given a three-dimensional shape input. Handling detailed free-form three-dimensional shapes in a data-driven framework is challenging because machine learning approaches usually require a consistent parametrization of input and output. We present a novel PolyCube maps-based parametrization that can be computed for three-dimensional shapes at interactive rates. This allows us to efficiently learn the nonlinear response of the flow using a Gaussian process regression. We demonstrate the effectiveness of our approach for the interactive design and optimization of a car body.
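As a rough illustration of the Gaussian process regression step mentioned in the abstract, the following minimal sketch fits a GP with a squared-exponential kernel to toy data. It is not the authors' implementation: the kernel choice, the hyperparameters, and the function names `rbf_kernel`, `gp_fit`, and `gp_predict` are assumptions made for this example, and a one-dimensional input stands in for the PolyCube-based shape parametrization.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2, variance=1.0):
    """Squared-exponential kernel between point sets a (n, d) and b (m, d)."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clamp tiny negative values caused by floating-point round-off.
    return variance * np.exp(-0.5 * np.maximum(sq_dists, 0.0) / length_scale**2)

def gp_fit(x_train, y_train, noise=1e-6):
    """Precompute the Cholesky factor and weights for GP prediction."""
    k = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    chol = np.linalg.cholesky(k)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    return chol, alpha

def gp_predict(x_test, x_train, chol, alpha):
    """Return the posterior mean and variance at the test inputs."""
    k_star = rbf_kernel(x_test, x_train)
    mean = k_star @ alpha
    v = np.linalg.solve(chol, k_star.T)
    var = rbf_kernel(x_test, x_test).diagonal() - np.sum(v**2, axis=0)
    return mean, np.maximum(var, 0.0)

# Toy data (hypothetical): a scalar response sampled at 20 design parameters.
x_train = np.linspace(0.0, 1.0, 20)[:, None]
y_train = np.sin(2.0 * np.pi * x_train[:, 0])
chol, alpha = gp_fit(x_train, y_train)
mean, var = gp_predict(np.array([[0.25]]), x_train, chol, alpha)
```

Once the factorization is computed, each prediction only needs kernel evaluations and triangular solves, which is the property that makes a regression model of this kind attractive for interactive use.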

@article{Umetani:2018,

author = {Umetani, Nobuyuki and Bickel, Bernd},

title = {Learning Three-dimensional Flow for Interactive Aerodynamic Design},

journal = {ACM Transactions on Graphics (SIGGRAPH 2018)},

year = {2018},

volume = {37},

number = {4},

articleno = {89},

numpages = {10},

url = {https://doi.org/10.1145/3197517.3201325},

doi = {10.1145/3197517.3201325},

publisher = {ACM},

address = {New York, NY, USA}

}

IEEE International Conference on Robotics and Automation 2018

In the context of robotic manipulation and grasping, the shift from a view that is static (force closure of a single posture) and contact-deprived (only contact for force closure is allowed, everything else is obstacle) towards a view that is dynamic and contact-rich (soft manipulation) has led to an increased interest in soft hands. These hands can easily exploit environmental constraints and object surfaces without risk, and safely interact with humans, but they also present some challenges. Designing them is difficult, as is predicting, modelling, and “programming” their interactions with objects and the environment. This paper tackles the problem of simulating them in a fast and effective way, leveraging novel and existing simulation technologies. We present a triple-layered simulation framework where dynamic properties such as stiffness are determined once from slow but accurate FEM simulation data, and then condensed into a lumped parameter model that can be used to quickly simulate soft fingers and soft hands. We apply our approach to the simulation of soft pneumatic fingers.
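The idea of condensing expensive simulation data into a lumped parameter model can be sketched as follows. This is only a toy under strong assumptions, not the paper's framework: each joint is treated as an independent linear torsional spring, the angle and torque samples are synthetic stand-ins for FEM output, and the function names `fit_joint_stiffness` and `equilibrium_angles` are hypothetical.

```python
import numpy as np

def fit_joint_stiffness(angles, torques):
    """Least-squares fit of k in torque = k * angle (line through the origin)."""
    angles = np.asarray(angles, dtype=float)
    torques = np.asarray(torques, dtype=float)
    return float(angles @ torques / (angles @ angles))

def equilibrium_angles(stiffnesses, joint_torques):
    """Static deflection of each joint, assuming independent torsional springs."""
    return np.asarray(joint_torques, dtype=float) / np.asarray(stiffnesses, dtype=float)

# Synthetic stand-in for FEM samples of one joint (assumed values).
sample_angles = np.linspace(0.1, 1.0, 10)   # rad
sample_torques = 2.5 * sample_angles        # N*m, generated with k = 2.5

# Expensive step done once: condense the samples into a single stiffness.
k_joint = fit_joint_stiffness(sample_angles, sample_torques)

# Cheap step done many times: evaluate the lumped model for a two-joint finger.
deflections = equilibrium_angles([k_joint, 2.0 * k_joint], [0.5, 0.5])
```

The fit is performed once per joint, after which evaluating the lumped model costs a few arithmetic operations per joint instead of a full FEM solve.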

@inproceedings{pozziefficient,

title = {Efficient FEM-Based Simulation of Soft Robots Modeled as Kinematic Chains},

booktitle = {IEEE International Conference on Robotics and Automation 2018},

author = {Pozzi, Maria and Miguel, Eder and Deimel, Raphael and Malvezzi, Monica and Bickel, Bernd and Brock, Oliver and Prattichizzo, Domenico},

year = {2018}

}

ACM Transactions on Graphics 36(6) (SIGGRAPH Asia 2017)

Color texture reproduction in 3D printing commonly ignores volumetric light transport (cross-talk) between surface points on a 3D print. Such light diffusion leads to significant blur of details and color bleeding, and is particularly severe for highly translucent resin-based print materials. Given their widely varying scattering properties, this cross-talk between surface points strongly depends on the internal structure of the volume surrounding each surface point. Existing scattering-aware methods use simplified models for light diffusion, and often accept the visual blur as an immutable property of the print medium. In contrast, our work counteracts heterogeneous scattering to obtain the impression of a crisp albedo texture on top of the 3D print, by optimizing for a fully volumetric material distribution that preserves the target appearance. Our method employs an efficient numerical optimizer on top of a general Monte-Carlo simulation of heterogeneous scattering, supported by a practical calibration procedure to obtain scattering parameters from a given set of printer materials. Despite the inherent translucency of the medium, we reproduce detailed surface textures on 3D prints. We evaluate our system using a commercial, five-tone 3D print process and compare against the printer’s native color texturing mode, demonstrating that our method preserves high-frequency features well without having to compromise on color gamut.

@article{ElekSumin2017SGA,

author = {Elek, Oskar and Sumin, Denis and Zhang, Ran and Weyrich, Tim and Myszkowski, Karol and Bickel, Bernd and Wilkie, Alexander and K\v{r}iv\'{a}nek, Jaroslav},

title = {Scattering-aware Texture Reproduction for 3{D} Printing},

journal = {ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia)},

volume = {36},

number = {6},

year = {2017},

pages = {241:1--241:15}

}

ACM Transactions on Graphics 36(4) (SIGGRAPH 2017)

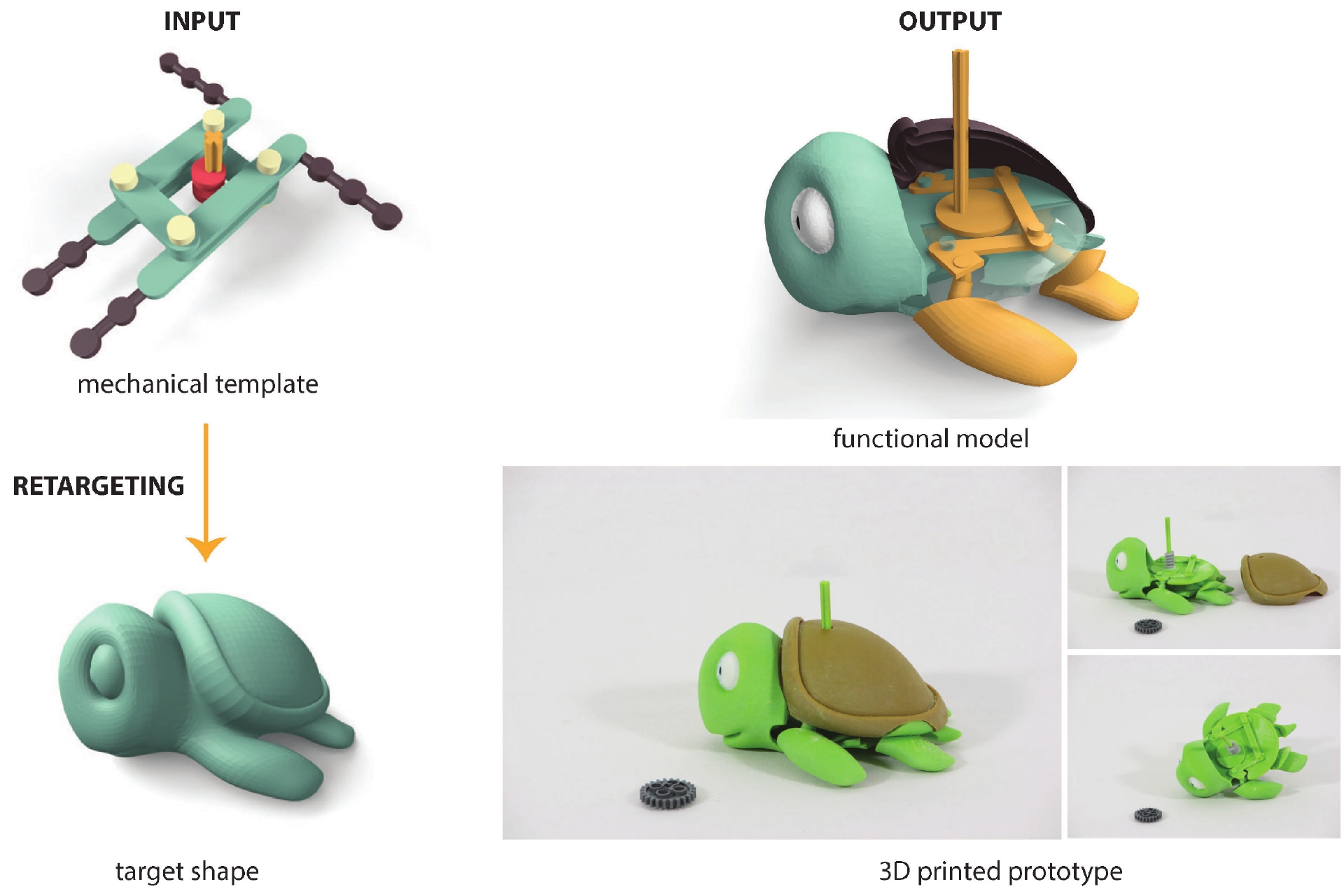

We present an interactive design system to create functional mechanical objects. Our computational approach allows novice users to retarget an existing mechanical template to a user-specified input shape. Our proposed representation for a mechanical template encodes a parameterized mechanism, mechanical constraints that ensure a physically valid configuration, spatial relationships of mechanical parts to the user-provided shape, and functional constraints that specify an intended functionality. We provide an intuitive interface and optimization-in-the-loop approach for finding a valid configuration of the mechanism and the shape to ensure that higher-level functional goals are met. Our algorithm interactively optimizes the mechanism while the user manipulates the placement of mechanical components and the shape. Our system allows users to efficiently explore various design choices and to synthesize customized mechanical objects that can be fabricated with rapid prototyping technologies. We demonstrate the efficacy of our approach by retargeting various mechanical templates to different shapes and fabricating the resulting functional mechanical objects.

@article{Zhang2017,

author = {Zhang, Ran and Auzinger, Thomas and Ceylan, Duygu and Li, Wilmot and Bickel, Bernd},

title = {Functionality-aware Retargeting of Mechanisms to 3D Shapes},

journal = {ACM Transactions on Graphics (SIGGRAPH 2017)},

year = {2017},

volume = {36},

number = {4}

}

ACM Transactions on Graphics 36(4) (SIGGRAPH 2017)

We present a computational approach for designing CurveUps, curvy shells that form from an initially flat state. They consist of small rigid tiles that are tightly held together by two pre-stretched elastic sheets attached to them. Our method allows the realization of smooth, doubly curved surfaces that can be fabricated as a flat piece. Once released, the restoring forces of the pre-stretched sheets support the object to take shape in 3D. CurveUps are structurally stable in their target configuration. The design process starts with a target surface. Our method generates a tile layout in 2D and optimizes the distribution, shape, and attachment areas of the tiles to obtain a configuration that is fabricable and in which the curved up state closely matches the target. Our approach is based on an efficient approximate model and a local optimization strategy for an otherwise intractable nonlinear optimization problem. We demonstrate the effectiveness of our approach for a wide range of shapes, all realized as physical prototypes.

@article{Guseinov2017,

author = {Guseinov, Ruslan and Miguel, Eder and Bickel, Bernd},

title = {CurveUps: Shaping Objects from Flat Plates with Tension-Actuated Curvature},

year = {2017},

volume = {36},

number = {4},

journal = {ACM Trans. Graph.},

month = {7},

articleno = {64},

numpages = {12},

doi = {10.1145/3072959.3073709},

}

ACM Transactions on Graphics (SIGGRAPH Asia 2016)